The Front End Developer/Engineer Handbook 2024

Written by Cody Lindley for Frontend Masters

This guide is open source. Please go ⭐️ it on GitHub and make suggestions/edits there: https://github.com/FrontendMasters/front-end-handbook-2024

1. Overview of Field of Work

This section provides an overview of the field of front-end development/engineering.

1.1 — What is a (Frontend||UI||UX) Developer/Engineer?

A front-end developer/engineer uses Web Platform Technologies (namely HTML, CSS, and JavaScript) to develop a front-end (i.e., a user interface with which the user interacts) for websites, web applications, and native applications.

Most practitioners are introduced to the occupation after creating their first HTML web page. The simplest work output of a front-end developer/engineer is an HTML document that a web browser renders as a web page.

Broadly speaking, professional front-end developers produce:

- The front-end of Websites e.g., wikipedia.org - A website is a collection of interlinked web pages and associated multimedia content accessible over the Internet. Typically identified by a unique domain name, a website is hosted on web servers and can be accessed by users through a web browser. Websites serve various functions ranging from simple static web pages to complex dynamic web pages.

- The front-end of Web Applications e.g., gmail.com - Unlike native applications installed on a device, web applications are delivered to users through a web browser. They often interact with databases to store, retrieve, and manipulate data. Because web applications run in a browser, they are generally cross-platform and can be accessed on various devices, including desktops, laptops, tablets, and smartphones. Common development libraries and frameworks in this space include React.js/Next.js, Svelte/SvelteKit, Vue.js/Nuxt, SolidJS/SolidStart, Angular, Astro, Qwik, and Lit.

- The front-end of Native Applications from Web Technologies e.g., Discord - A native application built from web technologies is software that runs natively on one or more operating systems (like Windows, macOS, Linux, iOS, and Android) from a single codebase of web technologies (including web application libraries and frameworks). Common development frameworks and patterns in this space include Electron for desktop apps, React Native and Capacitor for mobile apps, and newer solutions like Tauri v2, which supports both mobile and desktop operating systems. Note that native applications built from web technologies either run web technologies at runtime (e.g., Electron, Tauri) or, to some degree, translate web technologies into native code and UIs at runtime (e.g., React Native, NativeScript). Additionally, Progressive Web Apps (PWAs) can produce applications that are installable on one or more operating systems, with native-like experiences, from a single codebase of web technologies.

1.2 — Common Job Titles (based on "Areas of Focus" in section 2)

The table below lists most of the front-end job titles found in the wild, organized by area of focus.

| Area of Focus | Common Job Titles |
|---|---|
| Website Development | |
| Web Application Development / Software Engineering | |
| Web UX / UI Engineering | |
| Web Test Engineering | |
| Web Performance Engineering | |
| Web Accessibility Engineering | |
| Web Game Development | |

1.3 — Career Levels & Compensation

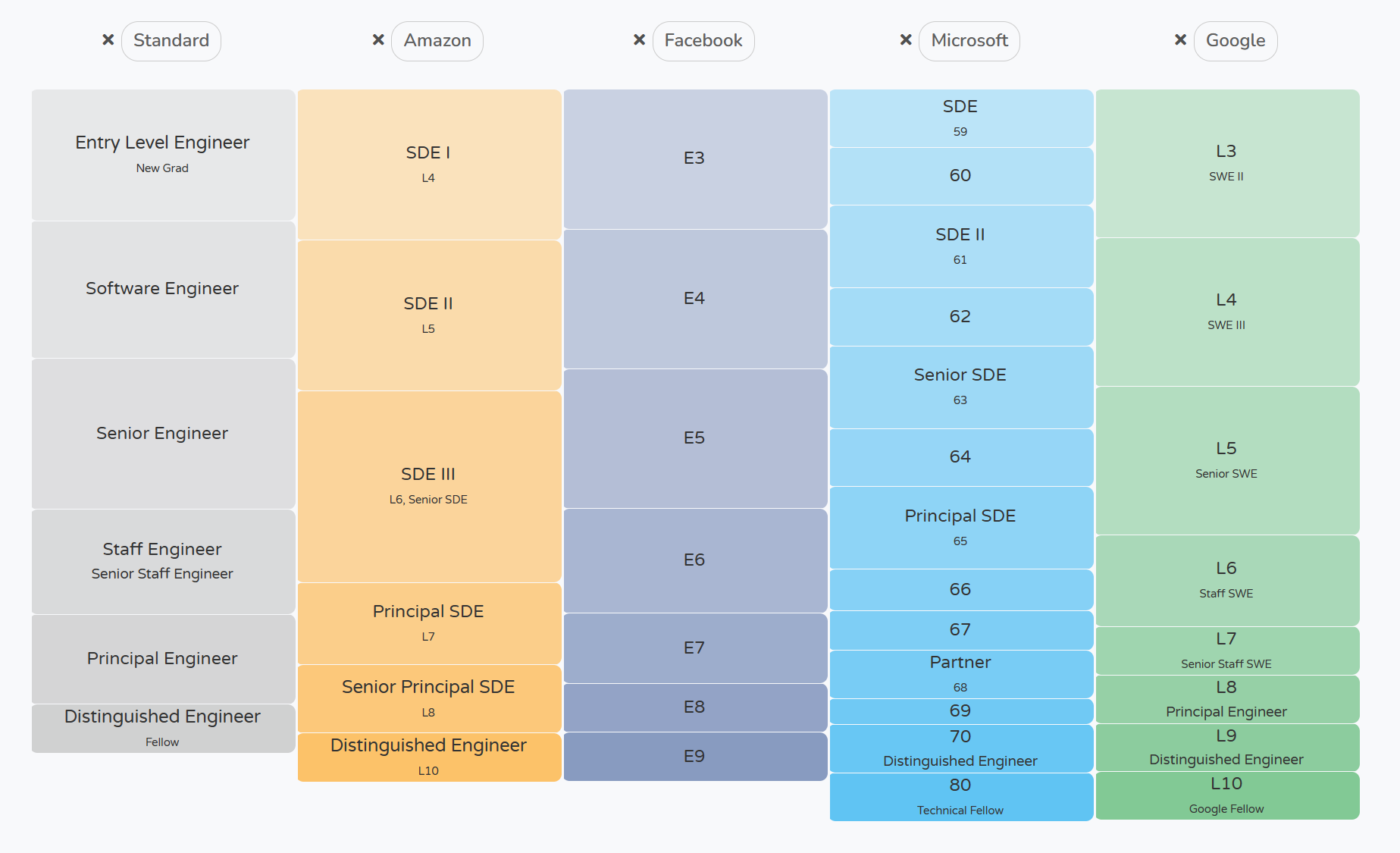

Roughly speaking, (Frontend||UI||UX) developers/engineers advance in their careers through the following levels and compensation ranges.

| Level | Description | Annual Compensation (USD) |
|---|---|---|
| Junior Engineer | Entry-level position. Focus on learning and skill development. Guided by senior members. | $40,000 - $80,000 |
| Engineer | Mid-level, 2-5 years of experience. Handles core development tasks and might take on more complex projects. | $80,000 - $100,000 |
| Senior Engineer | More than five years of experience. Handles intricate tasks and leads projects. | $100,000 - $130,000 |
| Lead Engineer | Leads teams or projects. Involved in technical decisions and architecture planning. | $130,000 - $160,000 |
| Staff Engineer | Long-term, high-ranking technical experts. Works on high-level architecture and design. | $150,000 - $180,000 |
| Principal Engineer | Highly specialized, often with a decade or more of experience. Influences company-wide technical projects. | $180,000 - $220,000 |
| Fellow / Distinguished Engineer | Sets or influences the technical direction at a company-wide level. Works on visionary projects. | $220,000 - $300,000 |

Note that companies typically use their own internal level names (e.g., level 66 at Microsoft).

Image source: https://www.levels.fyi/?compare=Standard,Amazon,Facebook,Microsoft,Google&track=Software%20Engineer

1.4 — Occupational Challenges

- The Front-end Divide: The "Great Divide" in front-end web development describes a growing split between two main factions: JavaScript-centric full-stack web programmers, who focus on software frameworks and programming for web applications, and HTML/CSS-centric developers, who specialize in UI patterns, user experiences, interactions, accessibility, SEO, and the visual and structural aspects of web pages and apps. The divide separates computer-science-minded programmers, who prioritize the programming and software skills required to build the front-end of web applications, from those who come to front-end development from the UI/UX side, typically as self-taught programmers. A front-end developer needs to be a mix of both, although the right mix is subjective. In 2024, however, the job market clearly favors JavaScript-centric programmers skilled in areas like JavaScript/TypeScript, Terminal/CLI, Node.js, APIs, Git, testing, CI/CD, software principles, and programming principles (see the follow-up posts "The great(er) divide in front-end" and "Frontend design, React, and a bridge over the great divide"). Still, the job market only reflects the choices made in web development; it is not an evaluation of the quality of those choices.

- Technology Churn: Technology churn, the rapid evolution and turnover of technologies, frameworks, and tools, presents a significant challenge in the field of front-end development. It can make the role exciting and, at the same time, daunting and exhausting.

- Web Compatibility: Ensuring that web technologies work consistently across various web platform runtimes (e.g., web browsers, webviews, Electron, etc.) is not as complicated and challenging as it once was, but it can still require significant effort and skill.

- Cross-platform Development: Building a single codebase to run on multiple devices presents several challenges, especially in the context of front-end development. This approach, often referred to as cross-platform development, aims to create software that works seamlessly on various devices, such as smartphones, tablets, and desktops, with different operating systems like iOS, Android, and Windows.

- Responsive Design & Adaptive Design Development: Adaptive and responsive design are critical approaches in front-end development for creating websites and applications that provide an optimal viewing experience across a wide range of devices, from desktop monitors to mobile phones. However, implementing these approaches is often complicated and time-consuming, and it can produce code that is difficult to maintain and test.

- Front-end Development is Too Complex: A growing consensus holds that current front-end development practices and tools are too complex and need to be simplified. This strain is real and we are all feeling it, but not everyone is pointing at the same causes.

- Front-end Development Has Somewhat Lost its Way: Somewhere along the line, being a front-end developer came to mean being a CS-minded programmer capable of wrangling overly complex thick-client UI frameworks to build software in web browsers on potentially many different devices. In many ways, front-end development has lost its way. It once focused primarily on the user and the user interface, with programming playing a secondary role. Why does being a front-end developer today mean one has to be more CS than UX? Because we have lost our way: we have accepted too much complexity and shifted our attention to less important matters. We are now somewhat stuck trying to be all things and ending up as nothing in particular. We have to find our way back to the user and the user interface.

- Challenges in Securing Employment: Securing a job has recently become a complex process, often marred by interviews that prioritize subjective and irrelevant criteria. These interviews frequently fail to assess skills pertinent to the actual job responsibilities, leading to a flawed hiring process. Technical roles in particular are often misunderstood, with assessments focusing on superficial generalizations rather than true technical acumen. Landing a job in this field often hinges more on chance or networking than on a comprehensive evaluation of a candidate's personality, teamwork abilities, practical experience, communication skills, and capacity for learning and critical thinking. Some of the most effective hiring practices acknowledge the inherent unpredictability of hiring and take a more holistic approach (e.g., selecting a candidate and engaging them in a short, small contract of real work).

2. Areas of Focus

This section identifies and defines the major areas of focus within the field of front-end development / engineering.

2.1 — Website Development

Website Development in front-end development refers to building and maintaining websites. It involves creating both simple static web pages and complex web-based applications, ensuring they are visually appealing, functional, and user-friendly.

Key Responsibilities:

- Building and structuring websites using HTML, CSS, and JavaScript.

- Ensuring responsive design for various devices and screen sizes.

- Front-end programming for interactive and dynamic user interfaces.

- Implementing search engine optimization (SEO) to improve search rankings.

- Enhancing website performance through various optimization techniques.

- Maintaining cross-browser compatibility.

- Adhering to web standards and accessibility guidelines.

Tools and Technologies:

- Proficiency with web development tools and languages such as HTML, CSS, and JavaScript.

- Familiarity with graphic design tools for website visuals.

- Using testing and debugging tools for website functionality and issue resolution.

Collaboration and Communication:

- Collaborating with designers, content creators, and other developers.

- Communicating with stakeholders to understand and implement web solutions.

Continuous Learning and Adaptation:

- Staying updated with the latest trends and standards in web development.

- Enhancing skills and adapting to new web development tools and methodologies.

2.2 — Web Application Development / Software Engineering

Web Application Development/Software Engineering in front-end development focuses on creating complex and dynamic web applications. This area encompasses the visual, interactive, architectural, and performance aspects of web applications, as well as their integration with back-end services.

Key Responsibilities:

- Building robust and scalable web applications using front-end technologies and modern frameworks.

- Designing the structure of web applications for modularity, scalability, and maintainability.

- Integrating front-end applications with back-end services and APIs.

- Optimizing web applications for speed and efficiency.

- Creating responsive designs for various devices and screen sizes.

- Ensuring cross-browser compatibility of web applications.

- Implementing security best practices in web applications.

Tools and Technologies:

- Expertise in front-end languages and frameworks such as HTML, CSS, JavaScript, React, Angular, and Vue.js.

- Proficiency in using version control systems like Git.

- Familiarity with testing frameworks and tools for various types of testing.

Collaboration and Communication:

- Collaborating with UX/UI designers, back-end developers, and product managers.

- Effectively communicating technical concepts to team members and stakeholders.

Continuous Learning and Adaptation:

- Keeping up with the latest trends in web development technologies and methodologies.

- Continuously learning new programming languages, frameworks, and tools.

2.3 — Web UX / UI Engineering

Web UX/UI Engineering is a multifaceted area of focus in front-end development, dedicated to designing and implementing user-friendly and visually appealing interfaces for web applications and websites. This field integrates principles of UX design, UI development, Design Systems, and interaction design to create cohesive and effective web experiences.

Key Responsibilities:

- User Experience (UX) Design: Understanding user needs and behaviors to create intuitive web interfaces, including user research and journey mapping.

- User Interface (UI) Development: Coding and building the interface using HTML, CSS, and JavaScript, ensuring responsive and accessible designs.

- Design Systems: Developing and maintaining design systems to ensure consistency across the web application.

- Interaction Design: Creating engaging interfaces with thoughtful interactions and dynamic feedback.

- Collaboration with Designers: Working alongside graphic and interaction designers to translate visual concepts into functional interfaces.

- Prototyping and Wireframing: Utilizing tools for prototyping and wireframing to demonstrate functionality and layout.

- Usability Testing and Accessibility Compliance: Conducting usability tests and ensuring compliance with accessibility standards.

- Performance Optimization: Balancing aesthetic elements with website performance, optimizing for speed and responsiveness.

Tools and Technologies:

- Design and Prototyping Tools: Proficient in tools like Adobe XD, Sketch, or Figma for UI/UX design and prototyping.

- Front-end Development Languages and Frameworks: Skilled in HTML, CSS, JavaScript, and frameworks like React, Angular, or Vue.js.

- Usability and Accessibility Tools: Using tools for conducting usability tests and ensuring accessibility.

Collaboration and Communication:

- Engaging with cross-functional teams including developers, product managers, and stakeholders.

- Communicating design ideas, prototypes, and interaction designs to align with project goals.

Continuous Learning and Adaptation:

- Staying updated with the latest trends in UX/UI design, interaction design, and front-end development.

- Adapting to new design tools, technologies, and methodologies.

2.4 — Web Test Engineering

Test Engineering, within the context of front-end development, involves rigorous testing of web applications and websites to ensure they meet functionality, performance, code quality, and usability standards. This area of focus is crucial for maintaining the quality and reliability of web products.

Key Responsibilities:

- Developing and Implementing Test Plans: Creating comprehensive test strategies for various aspects of web applications.

- Automated Testing: Using automated frameworks and tools for efficient testing.

- Manual Testing: Complementing automated tests with manual testing approaches.

- Bug Tracking and Reporting: Identifying and documenting bugs, and communicating findings for resolution.

- Cross-Browser and Cross-Platform Testing: Ensuring consistent functionality across different browsers and platforms.

- Performance Testing: Evaluating web applications for speed and efficiency under various conditions.

- Security Testing: Assessing applications for vulnerabilities and security risks.

Tools and Technologies:

- Testing Frameworks and Tools: Familiarity with tools like Selenium, Jest, Playwright, and Cypress.

- Bug Tracking Tools: Using tools like Jira, Bugzilla, or Trello for bug tracking.

Collaboration and Communication:

- Working with developers, designers, and product managers to ensure comprehensive testing.

- Communicating test results, bug reports, and quality metrics effectively.

Continuous Learning and Adaptation:

- Staying updated with the latest testing methodologies and tools.

- Adapting to new technologies and frameworks in the evolving field of web development.

2.5 — Web Performance Engineering

Web Performance Engineering is a specialized area within front-end development focused on optimizing the performance of websites and web applications. This field impacts user experience, search engine rankings, and overall site effectiveness. The primary goal is to ensure web pages load quickly and run smoothly.

Key Responsibilities:

- Performance Analysis and Benchmarking: Assessing current performance, identifying bottlenecks, and setting benchmarks.

- Optimizing Load Times: Employing techniques for quicker page loads.

- Responsive and Efficient Design: Optimizing resource usage in web designs.

- Network Performance Optimization: Improving data transmission over the network.

- Browser Performance Tuning: Ensuring smooth operation across different browsers.

- JavaScript Performance Optimization: Writing efficient JavaScript to enhance site performance.

- Testing and Monitoring: Regularly testing and monitoring for performance issues.
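The "Performance Analysis and Benchmarking" item above often starts with simply timing code. Here is a minimal sketch, assuming a runtime with the standard high-resolution `performance.now()` timer (modern browsers and recent Node.js versions); `median` and `benchmark` are hypothetical helper names. It reports the median rather than the mean because a single slow run (for example, during garbage collection) can skew an average.

```typescript
// Median of a list of numbers; less sensitive to one-off outliers than the mean.
function median(values: number[]): number {
  if (values.length === 0) throw new Error("median() needs at least one value");
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Hypothetical helper: run `fn` repeatedly and report the median duration in milliseconds.
function benchmark(label: string, fn: () => void, iterations = 50, warmupRuns = 5) {
  // Warm-up runs give the JavaScript engine a chance to optimize before measuring.
  for (let i = 0; i < warmupRuns; i++) fn();

  const samples: number[] = [];
  for (let i = 0; i < iterations; i++) {
    const start = performance.now(); // high-resolution timestamp in ms
    fn();
    samples.push(performance.now() - start);
  }
  return { label, iterations, medianMs: median(samples) };
}

// Example: compare two ways of building a large string.
const parts = Array.from({ length: 10_000 }, (_, i) => `item-${i}`);
const joined = benchmark("Array.join", () => {
  parts.join(",");
});
const concatenated = benchmark("+= concatenation", () => {
  let s = "";
  for (const p of parts) s += p + ",";
});
console.log(joined, concatenated);
```

Micro-benchmarks like this only compare code paths in isolation; measuring real page loads typically relies on tools such as Google Lighthouse and WebPageTest.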

Tools and Technologies:

- Performance Testing Tools: Using tools like Google Lighthouse and WebPageTest.

- Monitoring Tools: Utilizing tools for ongoing performance tracking.

Collaboration and Communication:

- Working with web developers, designers, and backend teams for integrated performance considerations.

- Communicating the importance of performance to stakeholders.

Continuous Learning and Industry Trends:

- Staying updated with web performance optimization techniques and technologies.

- Keeping pace with evolving web standards and best practices.

2.6 — Web Accessibility Engineering

A Web Accessibility Engineer is tasked with ensuring that web products are universally accessible, particularly for users with disabilities. Their role encompasses a thorough understanding and implementation of web accessibility standards, the design of accessible user interfaces, and rigorous testing to identify and address accessibility issues.

Key Responsibilities:

- Mastering the Web Content Accessibility Guidelines (WCAG), which is essential.

- Designing and adapting websites or applications to be fully usable by people with various impairments.

- Conducting regular assessments of web products to pinpoint and rectify accessibility obstacles.
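Color contrast is one part of WCAG that can be checked directly in code. The sketch below follows the WCAG 2.x definitions of relative luminance and contrast ratio, where level AA asks for at least 4.5:1 for normal text and 3:1 for large text. It only accepts six-digit hex colors, and the function names are hypothetical.

```typescript
// Parse a six-digit hex color such as "#1a2b3c" into 0-255 red, green, blue channels.
function hexToRgb(hex: string): [number, number, number] {
  const match = /^#?([0-9a-f]{6})$/i.exec(hex.trim());
  if (!match) throw new Error(`Expected a color like #rrggbb, got "${hex}"`);
  const n = Number.parseInt(match[1], 16);
  return [(n >> 16) & 0xff, (n >> 8) & 0xff, n & 0xff];
}

// WCAG 2.x relative luminance: linearize each sRGB channel, then weight by perceived brightness.
function relativeLuminance(hex: string): number {
  const [r, g, b] = hexToRgb(hex).map((channel) => {
    const c = channel / 255;
    return c <= 0.03928 ? c / 12.92 : ((c + 0.055) / 1.055) ** 2.4;
  });
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

// Contrast ratio from 1:1 (identical colors) up to 21:1 (black and white); argument order does not matter.
function contrastRatio(foreground: string, background: string): number {
  const a = relativeLuminance(foreground);
  const b = relativeLuminance(background);
  const lighter = Math.max(a, b);
  const darker = Math.min(a, b);
  return (lighter + 0.05) / (darker + 0.05);
}

// WCAG 2.x level AA thresholds: 4.5:1 for normal text, 3:1 for large text.
function meetsWcagAA(foreground: string, background: string, largeText = false): boolean {
  return contrastRatio(foreground, background) >= (largeText ? 3 : 4.5);
}

console.log(meetsWcagAA("#767676", "#ffffff")); // true  (about 4.54:1)
console.log(meetsWcagAA("#777777", "#ffffff")); // false (about 4.48:1)
```

Automated checks like this catch only part of the picture; they complement, rather than replace, testing with screen readers and real users.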

Tools and Technologies:

- Using screen readers, accessibility testing tools, and browser-based accessibility tools.

- Applying HTML, CSS, ARIA roles and attributes, and JavaScript to develop accessible web designs.

Collaboration and Advocacy:

- Engaging in teamwork with designers, developers, and stakeholders.

- Championing the cause of accessibility and universal web access.

Continuous Learning and Updates:

- Staying current with the latest developments in accessibility standards and technology.

- Enhancing skills and knowledge to tackle new accessibility challenges.

Legal and Ethical Considerations:

- Understanding legal frameworks like the Americans with Disabilities Act (ADA).

- Upholding an ethical commitment to digital equality and inclusivity.

2.7 — Web Game Development

Web Game Development involves creating interactive and engaging games that run directly in web browsers. This area of focus is distinct from traditional game development primarily due to the technologies used and the platform (web browsers) on which the games are deployed.

- Technologies and Tools - Web game developers often use HTML, CSS, and JavaScript as the core technologies. HTML provides interactive, media-rich content (notably through the canvas element), which is essential for game development. JavaScript is used for game logic and dynamics, and WebGL is used to render 2D and 3D graphics.

- Frameworks and Libraries - Several JavaScript-based game engines and frameworks facilitate web game development. Examples include Phaser for 2D games, Three.js for 3D games, and PixiJS for 2D rendering.

- Game Design - Web game development involves game design elements like storyline creation, character design, level design, and gameplay mechanics. The developer needs to create an engaging user experience within the constraints of a web browser.

- Performance Considerations - Developers must optimize games for performance, ensuring quick loading, smooth operation, and responsiveness. Techniques include using spritesheet animations and minimizing heavy assets.

- Cross-Platform and Responsive Design - Games must work well across different browsers and devices, requiring a responsive design approach and thorough testing on various platforms.

- Monetization and Distribution - Web games can be monetized through in-game purchases, advertisements, or direct sales. They are accessible directly through a web browser without downloads or installations.

- Community and Support - The web game development community is vibrant, with numerous forums, tutorials, and resources available for developers at all levels.
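To make the sprite sheet technique mentioned under performance considerations concrete, here is a minimal sketch of the arithmetic behind it: picking the current frame from elapsed time and locating that frame inside a grid-shaped sheet image. The `SpriteSheet` shape, the helper names, and the example sheet are hypothetical; only the canvas API mentioned in the final comment is real.

```typescript
// Hypothetical description of a sprite sheet: equally sized frames laid out in a grid.
interface SpriteSheet {
  frameWidth: number; // px
  frameHeight: number; // px
  columns: number; // frames per row in the image
  frameCount: number; // total frames in the animation
  fps: number; // playback speed in frames per second
}

// Which frame to show after `elapsedMs` of a looping animation.
function frameAt(sheet: SpriteSheet, elapsedMs: number): number {
  const framesElapsed = Math.floor((elapsedMs / 1000) * sheet.fps);
  return framesElapsed % sheet.frameCount;
}

// Source rectangle of a frame inside the sheet image (frames numbered row by row).
function frameRect(sheet: SpriteSheet, frame: number) {
  const column = frame % sheet.columns;
  const row = Math.floor(frame / sheet.columns);
  return {
    sx: column * sheet.frameWidth,
    sy: row * sheet.frameHeight,
    sw: sheet.frameWidth,
    sh: sheet.frameHeight,
  };
}

// Example: a hypothetical 8-frame walk cycle in a 4-column sheet, played at 12 fps.
const walkCycle: SpriteSheet = { frameWidth: 64, frameHeight: 64, columns: 4, frameCount: 8, fps: 12 };
console.log(frameAt(walkCycle, 500)); // 6
console.log(frameRect(walkCycle, 6)); // sx 128, sy 64, sw 64, sh 64

// In a browser, the rectangle feeds the nine-argument form of CanvasRenderingContext2D.drawImage
// inside a requestAnimationFrame loop, for example:
//   const { sx, sy, sw, sh } = frameRect(walkCycle, frameAt(walkCycle, elapsedMs));
//   ctx.drawImage(sheetImage, sx, sy, sw, sh, x, y, sw, sh);
```

Packing every frame into one image means the browser downloads a single asset instead of many, which is part of why sprite sheets help loading performance.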

Web game development, as an area of focus in front-end development, combines creativity in game design with technical skills in web technologies, offering a unique and exciting field for developers interested in both gaming and web development.

3. Learning / Education / Training

This section provides starting-point resources for those new to the field of front-end development, as well as resources for those committed to becoming professionals.

3.1 — Initial Steps

Before making a long-term commitment to a subscription, certification, or formal education, one should investigate the field of front-end development.

Here are several free resources to consume to get a sense of the technologies, tools, and scope of knowledge required to work as a front-end developer/engineer:

- WebGlossary.info

- Getting started with the web and Front-end web developer on MDN

- Learn HTML on web.dev, Learn CSS on web.dev

- HTML & CSS, JavaScript from Codecademy

- Free Boot Camp from Frontend Masters

- Web Development for Beginners - A Curriculum from Microsoft

- Complete Intro to Web Development, v3 from Frontend Masters

- The Valley of Code

- Frontend Developer Roadmap and Frontend Developer Roadmap (Beginner Version)

3.2 — On Demand Courses

On-demand courses are ideal for those who prefer to learn at their own pace and on their own schedule. They are also a great way to supplement other learning methods, such as in-person classes or self-study.

Frontend Masters:

- Description: Frontend Masters is a specialized learning platform focusing primarily on web development. It has courses and learning paths on all the most important front-end and fullstack technologies.

- Target Audience: Primarily aimed at professional web developers and those looking to deepen their understanding of front-end technologies. The content ranges from beginner to advanced levels.

- Key Features: Offers workshops and courses taught by industry experts, provides learning paths, and includes access to a community of developers. The platform is known for its high-quality, detailed courses on all the key technologies and aspects of front-end development.

Codecademy:

- Description: Codecademy is a popular online learning platform that offers interactive courses on a wide range of programming languages and technology topics, including web development, data science, and more.

- Target Audience: Suitable for beginners and intermediate learners who prefer a more interactive, hands-on approach to learning coding skills.

- Key Features: Known for its interactive coding environment where learners can practice code directly in the browser. Offers structured learning paths, projects, and quizzes to reinforce learning.

LinkedIn Learning (formerly Lynda.com):

- Description: LinkedIn Learning provides a broad array of courses covering various topics, including web development, graphic design, business, and more. It integrates with the LinkedIn platform, offering personalized course recommendations.

- Target Audience: Ideal for professionals looking to expand their skill set in various areas, not just limited to web development.

- Key Features: Offers video-based courses with a more general approach to professional development. Learners get course recommendations based on their LinkedIn profile, and completed courses can be added to their LinkedIn profile.

O'Reilly Learning (formerly Safari Books Online):

- Description: O'Reilly Learning is a comprehensive learning platform offering books, videos, live online training, and interactive learning experiences on a wide range of technology and business topics.

- Target Audience: Suitable for professionals and students in the technology and business sectors who are looking for in-depth material and resources.

- Key Features: Extensive library of books and videos from O'Reilly Media and other publishers, live online training sessions, and case studies. Known for its vast collection of resources and in-depth content.

3.3 — Certifications & Learning Paths

Certifications and learning paths are ideal for those who prefer a more structured curriculum or are looking to gain a more formal qualification. Note that certifications in front-end development aren't taken as seriously as they are in other industries and professions, but they can still be valuable for demonstrating knowledge and skills.

- Meta Front-End Developer Professional Certificate from Coursera

- Undergraduate Introduction to Web Development Certificate from Harvard Extension School

- Professional Certificate in Front-End Web Developer from edX

- Front End Web Developer Nanodegree Program from Udacity

- Front-End Web Developer Short Course from General Assembly

- Beginner Web Development Path and Senior Web Developer Path from Frontend Masters

- The Frontend Developer Career Path from Scrimba

- Front End Web Development Treehouse Techdegree from Treehouse

3.4 — University/College Education

In the realm of higher education, front-end development is typically encompassed within more extensive academic disciplines. Majors such as Computer Science, Information Technology, and Web Development often integrate front-end development as a vital component of their curriculum.

4. Foundational Aspects

This section identifies and defines the foundational aspects of the environment in which front-end web development takes place.

4.1 — World Wide Web (aka, WWW or Web)

The World Wide Web, commonly known as the Web, is a system of interlinked hypertext documents and resources. Accessed via the internet, it utilizes browsers to render web pages, allowing users to view, navigate, and interact with a wealth of information and multimedia. The Web's inception by Tim Berners-Lee in 1989 revolutionized information sharing and communication, laying the groundwork for the modern digital era.

Learn more:

- How the web works on MDN

- The web

4.2 — The Internet

The Internet is a vast network of interconnected computers that spans the globe. It's the infrastructure that enables the World Wide Web and other services like email and file sharing. The Internet operates on a suite of protocols, the most fundamental being the Internet Protocol (IP), which orchestrates the routing of data across this vast network.

Learn more:

- Internet Fundamentals from Frontend Masters

- How does the Internet work? on MDN

- The Internet

4.3 — IP (Internet Protocol) Addresses

IP addresses serve as unique identifiers for devices on the internet, similar to how a postal address identifies a location in the physical world. They are critical for the accurate routing and delivery of data across the internet. Each device connected to the internet, from computers to smartphones, is assigned an IP address.

There are two main types of IP address standards:

- IPv4 (Internet Protocol version 4): This is the older and most widely used standard. IPv4 addresses are 32 bits long, allowing for a theoretical maximum of about 4.3 billion unique addresses. They are typically written in dotted-decimal notation: four octets (8-bit numbers from 0 to 255) separated by periods (e.g., 192.0.2.1).

- IPv6 (Internet Protocol version 6): With the rapid growth of the internet and the exhaustion of IPv4 addresses, IPv6 was introduced. IPv6 addresses are 128 bits long, greatly expanding the number of available addresses. They are expressed in hexadecimal format, separated by colons (e.g., 2001:0db8:85a3:0000:0000:8a2e:0370:7334). This standard not only addresses the limitation of available addresses but also improves upon various aspects of IP addressing, including simplified processing by routers and enhanced security features.
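To make the 32-bit nature of IPv4 concrete, here is a minimal TypeScript sketch that converts between dotted-decimal text and the underlying 32-bit number. The function and constant names are hypothetical. It uses multiplication rather than bitwise shifts because JavaScript's bitwise operators work on signed 32-bit integers, which would turn addresses whose first octet is 128 or higher into negative numbers.

```typescript
// Total number of possible IPv4 addresses: 2^32 = 4,294,967,296 (about 4.3 billion).
const IPV4_ADDRESS_COUNT = 2 ** 32;

// Convert dotted-decimal text (e.g., "192.0.2.1") into its 32-bit unsigned value.
function ipv4ToNumber(address: string): number {
  const octets = address.split(".");
  if (octets.length !== 4) {
    throw new Error(`Expected 4 octets, got ${octets.length}: "${address}"`);
  }
  return octets.reduce((acc, part) => {
    // Each octet is 8 bits: a whole number from 0 to 255.
    if (!/^\d{1,3}$/.test(part) || Number(part) > 255) {
      throw new Error(`Invalid octet "${part}" in "${address}"`);
    }
    // Shift the bits collected so far left by 8 (multiply by 256), then add this octet.
    return acc * 256 + Number(part);
  }, 0);
}

// Convert a 32-bit unsigned value back into dotted-decimal text.
function numberToIpv4(value: number): string {
  if (!Number.isInteger(value) || value < 0 || value >= IPV4_ADDRESS_COUNT) {
    throw new Error(`Not a 32-bit unsigned integer: ${value}`);
  }
  const octets: number[] = [];
  for (let i = 3; i >= 0; i--) {
    // Extract the octet at position i (most significant first).
    octets.push(Math.floor(value / 256 ** i) % 256);
  }
  return octets.join(".");
}

console.log(ipv4ToNumber("192.0.2.1")); // 3221225985
console.log(numberToIpv4(3221225985)); // 192.0.2.1
```

The same idea extends to IPv6, except that a 128-bit value no longer fits in a JavaScript `number` and would need `BigInt`.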

Both IP address standards are essential in the current landscape of the internet. While IPv4 is still predominant, the transition to IPv6 is gradually taking place as the need for more internet addresses continues to grow, driven by the proliferation of internet-connected devices.

4.4 — Domain Names

Domain names are intuitive, human-friendly identifiers for websites on the internet: easily memorable names that stand in for technical Internet Protocol (IP) addresses. They are the cornerstone of web navigation, simplifying the process of finding and accessing websites.

For instance, a domain name like 'example.com' is far more recognizable and easier to remember than its numerical IP address counterpart. This system allows internet users to locate and visit websites without memorizing complex strings of numbers (i.e., IP addresses). Each domain name is unique, ensuring that every website has its own distinct address on the web.

The structure of domain names is hierarchical, typically consisting of a top-level domain (TLD) such as '.com', '.org', or '.net', and a second-level domain which is chosen by the website owner. The combination of these elements forms a complete domain name that represents a specific IP address.
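Because domain names are hierarchical and read right to left (TLD, then second-level domain, then any subdomains), splitting one into its labels is straightforward. The following is a naive sketch with hypothetical names. It assumes a single-label TLD such as '.com', so multi-label public suffixes like '.co.uk' would need the Public Suffix List instead.

```typescript
// Hypothetical result shape for a split domain name.
interface DomainParts {
  tld: string; // e.g., "com"
  secondLevel: string; // e.g., "example" (chosen by the owner)
  subdomains: string[]; // e.g., ["www"], ordered left to right
}

function splitDomain(domain: string): DomainParts {
  // Domain names are case-insensitive, and a trailing dot denotes the DNS root.
  const labels = domain.trim().toLowerCase().replace(/\.$/, "").split(".");
  if (labels.length < 2) {
    throw new Error(`Expected at least a second-level domain and a TLD: "${domain}"`);
  }
  // Each label must be 1 to 63 characters long.
  for (const label of labels) {
    if (label.length === 0 || label.length > 63) {
      throw new Error(`Invalid label "${label}" in "${domain}"`);
    }
  }
  return {
    tld: labels[labels.length - 1],
    secondLevel: labels[labels.length - 2],
    subdomains: labels.slice(0, -2),
  };
}

// Logs tld "com", secondLevel "example", and subdomains ["www"].
console.log(splitDomain("www.example.com"));
```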

Domain names not only facilitate ease of access to websites but also play a crucial role in branding and establishing an online identity for businesses and individuals alike. In the digital age, a domain name is more than just an address; it's a vital part of one's online presence and digital branding strategy.

Learn more:

- What is a domain name? on MDN

4.5 — DNS (Domain Name System)

The Domain Name System (DNS) is the internet's equivalent of a phone book. It translates user-friendly domain names (like www.example.com) into IP addresses that computers use. DNS is crucial for the user-friendly navigation of the internet, allowing users to access websites without needing to memorize complex numerical IP addresses.
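The phone-book analogy can be sketched in a few lines. This is a toy, in-memory model, not a real resolver: the records are hypothetical and use the 192.0.2.0/24 and 198.51.100.0/24 blocks reserved for documentation, and the cache mimics how resolvers reuse an answer until its TTL (time to live) expires. In Node.js, a real lookup goes through the built-in `node:dns` module (for example, `dns.promises.lookup()`).

```typescript
// One hypothetical "A" record: the IPv4 address for a name, plus how long it may be cached.
interface ARecord {
  address: string;
  ttlSeconds: number;
}

class ToyResolver {
  private records: Map<string, ARecord>;
  private cache = new Map<string, { address: string; expiresAt: number }>();
  public upstreamQueries = 0; // counts lookups that missed the cache

  constructor(records: Map<string, ARecord>) {
    this.records = records;
  }

  resolve(hostname: string, now: number = Date.now()): string {
    // DNS names are case-insensitive, and a trailing dot means the root zone.
    const name = hostname.toLowerCase().replace(/\.$/, "");

    // Reuse a cached answer while its TTL has not expired.
    const cached = this.cache.get(name);
    if (cached && cached.expiresAt > now) return cached.address;

    // Otherwise "ask upstream" (here, just the in-memory record table).
    this.upstreamQueries++;
    const record = this.records.get(name);
    if (!record) throw new Error(`No record for ${hostname} (NXDOMAIN)`);

    this.cache.set(name, { address: record.address, expiresAt: now + record.ttlSeconds * 1000 });
    return record.address;
  }
}

const resolver = new ToyResolver(
  new Map<string, ARecord>([
    ["example.com", { address: "192.0.2.1", ttlSeconds: 3600 }],
    ["www.example.com", { address: "192.0.2.1", ttlSeconds: 3600 }],
    ["api.example.com", { address: "198.51.100.7", ttlSeconds: 300 }],
  ]),
);

console.log(resolver.resolve("www.example.com")); // 192.0.2.1
```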


4.6 — URLs (Uniform Resource Locators)

Uniform Resource Locators (URLs) are the addresses used to access resources on the internet. A URL specifies the location of a resource on a server and the protocol used to access it. It typically includes a protocol (like HTTP or HTTPS), a domain name, and a path to the resource.
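Browsers and Node.js both ship the standard `URL` class, which splits a URL into the parts described above. The example URL below is made up for illustration.

```typescript
// The standard URL class is built into browsers and Node.js; no import is needed.
const url = new URL("https://www.example.com:8080/docs/guide?lang=en#intro");

console.log(url.protocol); // "https:" (the protocol, or scheme)
console.log(url.hostname); // "www.example.com" (the domain name)
console.log(url.port); // "8080" (empty string when the default port is used)
console.log(url.pathname); // "/docs/guide" (the path to the resource)
console.log(url.searchParams.get("lang")); // "en" (a query-string parameter)
console.log(url.hash); // "#intro" (the fragment; browsers do not send it to the server)

// Relative references resolve against a base URL, the way links inside a page do.
const logo = new URL("../img/logo.png", "https://www.example.com/docs/guide");
console.log(logo.href); // "https://www.example.com/img/logo.png"
```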

Learn more:

- Guide to URLs on MDN

4.7 — Servers and Web Hosting

Servers are specialized computers designed to process requests and distribute data over the internet and local networks. They form the backbone of the digital ecosystem, supporting everything from website hosting to the execution of complex applications.
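At its core, a web server listens for requests and sends back responses. The sketch below uses Node.js's built-in `node:http` module; the routes and the `handleRequest` and `startServer` helpers are hypothetical. Production sites usually sit behind a hosting platform, reverse proxy, or framework rather than a bare server like this.

```typescript
import { createServer } from "node:http";
import type { IncomingMessage, Server, ServerResponse } from "node:http";

interface SimpleResponse {
  status: number;
  headers: Record<string, string>;
  body: string;
}

// Routing logic kept separate from networking, so it is easy to test.
function handleRequest(method: string, path: string): SimpleResponse {
  if (method !== "GET") {
    // A 405 response should say which methods are allowed.
    return {
      status: 405,
      headers: { Allow: "GET", "Content-Type": "text/plain; charset=utf-8" },
      body: "Method Not Allowed",
    };
  }
  if (path === "/") {
    return {
      status: 200,
      headers: { "Content-Type": "text/html; charset=utf-8" },
      body: "<!doctype html><title>Home</title><h1>Hello from the server</h1>",
    };
  }
  return { status: 404, headers: { "Content-Type": "text/plain; charset=utf-8" }, body: "Not Found" };
}

// Wire the routing logic to a real HTTP server listening on `port`.
function startServer(port = 3000): Server {
  const server = createServer((req: IncomingMessage, res: ServerResponse) => {
    // req.url holds only the path and query (e.g., "/?a=1"), so URL needs a placeholder base.
    const path = new URL(req.url ?? "/", "http://localhost").pathname;
    const result = handleRequest(req.method ?? "GET", path);
    res.writeHead(result.status, result.headers);
    res.end(result.body);
  });
  server.listen(port, () => console.log(`Listening on http://localhost:${port}`));
  return server;
}

// Uncomment to run, then visit http://localhost:3000 in a browser:
// startServer();
```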

Web hosting is a service that manages and provides server infrastructure along with reliable internet connectivity. It is essential for the uninterrupted operation of websites and online applications, and it comes in a wide range of solutions tailored to different operational needs and scales. Whether for a personal blog or a large enterprise website, there is usually a hosting option that fits the project's requirements and goals.

- Shared Hosting: An economical choice where resources on a single server are shared among multiple clients. Best suited for small websites and blogs, it's budget-friendly but offers limited resources and control.

- VPS (Virtual Private Server) Hosting: Strikes a balance between affordability and functionality. Clients share a server but have individual virtual environments, providing enhanced resources and customization possibilities.

- Dedicated Server Hosting: Offers exclusive servers to clients, ensuring maximum resource availability, top-notch performance, and heightened security. Ideal for large businesses and websites with heavy traffic.

- Cloud Hosting: A versatile and scalable solution that utilizes a network of virtual servers in the cloud. Resources can be scaled up or down to match traffic, which suits businesses with dynamic traffic patterns.

Selecting the appropriate web hosting solution depends on several factors, including business size, budget, traffic levels, and specific technical needs. The wide range of hosting options available today means organizations of nearly any size can find a setup that fits.

Learn more:

- What is a web server? on MDN

- Everything You Need To Know About Web Hosting

- Full Stack for Front-End Engineers, v3 from Frontend Masters

4.8 — CDN (Content Delivery Network)

A Content Delivery Network (CDN) is a network of servers distributed across many geographic locations. These servers work together to speed up the delivery of internet content to users worldwide.

By caching content like web pages, images, and video streams on multiple servers located closer to the end-users, CDNs significantly minimize latency. This setup is particularly beneficial for websites with high traffic volumes and online services with a global user base. The proximity of CDN servers to users ensures faster access speeds, enhancing the overall user experience by reducing loading times and improving website performance.

Beyond speed enhancement, CDNs also contribute to load balancing and handling large volumes of traffic, thereby increasing the reliability and availability of websites and web services. They effectively manage traffic spikes and mitigate potential bottlenecks, ensuring consistent content delivery even during peak times.

Because speed and reliability directly affect user experience, CDNs have become a standard part of web infrastructure for businesses that serve content online.
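The core idea of an edge server can be sketched as a cache with an expiry time: serve a stored copy if it is still fresh, otherwise fetch from the origin and keep a copy. The EdgeCache class, its fixed time-to-live policy, and the injected clock are hypothetical simplifications. Real CDNs typically honor HTTP caching headers and route each user to a nearby edge location.

```typescript
// Hypothetical origin fetcher: given a path, return the content for it.
type OriginFetcher = (path: string) => string;

interface CachedEntry {
  body: string;
  storedAt: number; // milliseconds, read from the injected clock
}

class EdgeCache {
  private readonly entries = new Map<string, CachedEntry>();
  private readonly ttlMs: number;
  private readonly fetchFromOrigin: OriginFetcher;
  private readonly now: () => number;

  constructor(ttlMs: number, fetchFromOrigin: OriginFetcher, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.fetchFromOrigin = fetchFromOrigin;
    this.now = now;
  }

  get(path: string): { body: string; servedFrom: "edge" | "origin" } {
    const entry = this.entries.get(path);
    if (entry !== undefined && this.now() - entry.storedAt < this.ttlMs) {
      return { body: entry.body, servedFrom: "edge" }; // cache hit: no trip to the origin
    }
    // Cache miss or expired entry: fetch from the origin and keep a copy at the edge.
    const body = this.fetchFromOrigin(path);
    this.entries.set(path, { body, storedAt: this.now() });
    return { body, servedFrom: "origin" };
  }
}

// Usage with a fake clock so expiry is easy to see.
let clock = 0;
let originHits = 0;
const edge = new EdgeCache(60_000, (path) => {
  originHits++;
  return `content of ${path}`;
}, () => clock);

const served: string[] = [];
served.push(edge.get("/logo.png").servedFrom); // "origin": first request
served.push(edge.get("/logo.png").servedFrom); // "edge": cached copy is fresh
clock = 61_000;
served.push(edge.get("/logo.png").servedFrom); // "origin": cached copy expired
```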

4.9 — HTTP/HTTPS (Hypertext Transfer Protocol/Secure)

HTTP (HyperText Transfer Protocol) and HTTPS (HTTP Secure) are foundational protocols used for the transfer of information on the internet. HTTP forms the basis of data communication on the World Wide Web, whereas HTTPS adds a layer of security to this communication.

Key Aspects of HTTP and HTTPS:

- Basic Function: HTTP is designed to enable communication between web browsers and servers. It follows a request-response structure where the browser requests data, and the server responds with the requested information.

- Security with HTTPS: HTTPS is essentially HTTP with encryption. It uses TLS (Transport Layer Security, the successor to the now-deprecated SSL protocol) to encrypt the data transferred between the browser and the server, protecting sensitive information from interception or tampering.

- Port Numbers: By default, HTTP uses port 80 and HTTPS uses port 443. These ports are used by web servers to listen for incoming connections from web clients.

- URL Structure: In URLs, HTTP is indicated by 'http://' while HTTPS is indicated by 'https://'. This small difference in the URL signifies whether the connection to the website is secured with encryption or not.
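To show the request-response structure, default ports, and URL schemes together, this TypeScript sketch builds the text of a minimal HTTP/1.1 GET request and parses a response status line. The function names are hypothetical. For an https:// URL, the same message would be sent inside an encrypted TLS connection on port 443 rather than as plain text on port 80.

```typescript
// Default ports for the two schemes.
function defaultPort(protocol: string): number {
  if (protocol === "http:") return 80;
  if (protocol === "https:") return 443;
  throw new Error(`Unsupported protocol: ${protocol}`);
}

// Builds the text of a minimal HTTP/1.1 GET request for a URL.
function buildGetRequest(href: string): { host: string; port: number; message: string } {
  const url = new URL(href);
  // url.port is "" when the URL uses the scheme's default port.
  const port = url.port === "" ? defaultPort(url.protocol) : Number(url.port);
  const message = [
    `GET ${url.pathname}${url.search} HTTP/1.1`, // request line: method, target, version
    `Host: ${url.host}`, // includes the port only when it is not the default
    "Connection: close",
    "", // an empty line ends the header section; this GET has no body
    "",
  ].join("\r\n");
  return { host: url.hostname, port, message };
}

// Splits a response status line such as "HTTP/1.1 404 Not Found".
function parseStatusLine(line: string): { version: string; status: number; reason: string } {
  const match = /^(HTTP\/\d(?:\.\d)?) (\d{3}) ?(.*)$/.exec(line);
  if (match === null) throw new Error(`Malformed status line: ${line}`);
  return { version: match[1], status: Number(match[2]), reason: match[3] };
}

const request = buildGetRequest("https://example.com/docs?page=2");
console.log(request.port); // 443
console.log(parseStatusLine("HTTP/1.1 404 Not Found").status); // 404
```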

Differences and Usage:

- Data Security: The most significant difference is security. HTTPS provides a secure channel, especially important for websites handling sensitive data like banking, shopping, or personal information.

- SEO and Trust: Search engines like Google give preference to HTTPS websites, considering them more secure. Also, web browsers often display security warnings for HTTP sites, affecting user trust.

- Certificate Requirements: To implement HTTPS, a website must obtain a TLS certificate (still commonly called an SSL certificate) from a recognized Certificate Authority (CA). The certificate lets the browser verify the server's identity and is required to establish a trusted, encrypted connection.

- Performance: While HTTPS used to be slower than HTTP due to the encryption process, advancements in technology have significantly reduced this performance gap.

Understanding the differences between HTTP and HTTPS is crucial for web developers and users alike. The choice between them can significantly impact website security, user trust, and search engine ranking.

References:

- HTTP response status codes on MDN

4.10 — Web Browsers

Web browsers are sophisticated software applications that play a crucial role in accessing and interacting with the World Wide Web. They serve as the interface between users and web content, rendering web pages and providing a seamless user experience. Here's a deeper look into their functionality and features:

Core Functions of Web Browsers:

- Rendering Web Content: Browsers interpret and display content written in HTML, CSS, and JavaScript. They process HTML for structure, CSS for presentation, and JavaScript for interactivity, turning them into the visual, interactive pages users see.

- Request and Response Cycle: When a user requests a webpage, the browser sends this request to the server where the page is hosted. The server responds with the necessary files (HTML, CSS, JavaScript, images, etc.), which the browser then processes to render the page.

- Executing JavaScript: Modern browsers come with JavaScript engines that execute JavaScript code, enabling dynamic interactions on web pages, such as form validations, animations, and asynchronous data fetching.

How Browsers Work Behind the Scenes:

- Parsing: Browsers parse HTML, CSS, and JavaScript files to understand the structure, style, and behavior of the webpage.

- Rendering Engine: Each browser has a rendering engine that translates web content into what users see on their screen. This includes layout calculations, style computations, and painting the final visual output.

- Optimization: Modern browsers optimize performance through techniques like caching (storing copies of frequently accessed resources) and lazy loading (loading non-critical resources only when needed).
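Browser caching is driven largely by response headers. The TypeScript sketch below is a simplified model of the freshness rule from the HTTP caching specification: a stored response can be reused without contacting the server while its age is below its max-age. The function name is hypothetical, and it deliberately ignores Expires, s-maxage, heuristic freshness, and other details that real browsers handle.

```typescript
// Simplified model: only the no-store, no-cache, and max-age directives are considered.
function canReuseWithoutRevalidation(cacheControl: string, ageSeconds: number): boolean {
  const directives = cacheControl
    .split(",")
    .map((directive) => directive.trim().toLowerCase())
    .filter((directive) => directive.length > 0);

  // no-store: never keep a copy; no-cache: must check with the server before reuse.
  if (directives.includes("no-store") || directives.includes("no-cache")) return false;

  const maxAge = directives.find((directive) => directive.startsWith("max-age="));
  if (maxAge === undefined) return false; // no explicit lifetime in this simplified model

  const lifetime = Number(maxAge.slice("max-age=".length));
  // A stored response is fresh while its age is below its freshness lifetime.
  return Number.isFinite(lifetime) && ageSeconds < lifetime;
}

console.log(canReuseWithoutRevalidation("public, max-age=3600", 120)); // true
console.log(canReuseWithoutRevalidation("public, max-age=3600", 4000)); // false: stale
```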

The Role of Browsers in Web Development:

- Cross-browser Compatibility: Developers must ensure that websites function correctly across different browsers, each with its own quirks and rendering behaviors.

- Accessibility: Browsers provide features that help make web content accessible to all users, including those with disabilities, such as exposing page content to assistive technologies like screen readers.

Learn more:

- Populating the page: how browsers work on MDN

- How browsers work on web.dev

4.11 — JavaScript Engines

JavaScript engines, sometimes referred to as "JavaScript Virtual Machines," are specialized software components designed to process, compile, and execute JavaScript code. JavaScript is a high-level language, so an engine is needed to turn source code into instructions a computer can execute; although JavaScript is often described as interpreted, modern engines combine interpretation with Just-In-Time compilation. These engines are not only part of web browsers but are also used in other contexts, like servers (Node.js uses the V8 engine).

Key Functions of JavaScript Engines:

- Parsing: The engine reads the raw JavaScript code, breaking it down into elements it can understand (tokens) and constructing a structure (Abstract Syntax Tree - AST) that represents the program's syntactic structure.

- Compilation: Modern JavaScript engines use a technique called Just-In-Time (JIT) compilation. In many engines, this process involves two stages:

- Baseline Compilation: Quickly converts JavaScript into bytecode or unoptimized machine code so that execution can start right away.

- Optimizing Compilation: Recompiles frequently executed ("hot") code into highly optimized machine code, based on assumptions about how that code has behaved so far (for example, which types a function receives). If those assumptions stop holding, the engine de-optimizes the code and falls back to the slower version.

- Execution: The compiled code is executed by the computer's processor.

- Optimization: During execution, the engine collects data to optimize the code's performance in real-time, often recompiling it for greater efficiency.
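Real engines are far more complex, but the parse-then-execute pipeline can be illustrated with a toy TypeScript example for arithmetic expressions: tokenize the source, build an AST that encodes operator precedence, then walk the tree to compute a result. All names are hypothetical, and walking the tree directly is a simple interpreter; a production engine would instead compile the code, for example with the Just-In-Time techniques described above.

```typescript
type BinaryOp = "+" | "-" | "*" | "/";
type Token = { kind: "num"; value: number } | { kind: "op"; value: BinaryOp | "(" | ")" };
type AstNode =
  | { kind: "num"; value: number }
  | { kind: "binary"; op: BinaryOp; left: AstNode; right: AstNode };

// 1. Tokenizing: turn raw characters into a flat list of tokens.
function tokenize(source: string): Token[] {
  const tokens: Token[] = [];
  let i = 0;
  while (i < source.length) {
    const ch = source[i];
    if (/\s/.test(ch)) { i++; continue; }
    if ("+-*/()".includes(ch)) {
      tokens.push({ kind: "op", value: ch as BinaryOp | "(" | ")" });
      i++;
      continue;
    }
    if (/[0-9]/.test(ch)) {
      let j = i;
      while (j < source.length && /[0-9.]/.test(source[j])) j++;
      const value = Number(source.slice(i, j));
      if (Number.isNaN(value)) throw new SyntaxError(`Invalid number at position ${i}`);
      tokens.push({ kind: "num", value });
      i = j;
      continue;
    }
    throw new SyntaxError(`Unexpected character "${ch}" at position ${i}`);
  }
  return tokens;
}

// 2. Parsing: build an Abstract Syntax Tree in which * and / bind tighter than + and -.
function parse(tokens: Token[]): AstNode {
  let pos = 0;

  // Parses a left-associative chain such as a - b - c.
  function binaryLevel(ops: BinaryOp[], operand: () => AstNode): AstNode {
    let node = operand();
    while (true) {
      const token = tokens[pos];
      if (token === undefined || token.kind !== "op") return node;
      const op = token.value;
      if (op === "(" || op === ")" || !ops.includes(op)) return node;
      pos++;
      node = { kind: "binary", op, left: node, right: operand() };
    }
  }
  function expression(): AstNode { return binaryLevel(["+", "-"], term); }
  function term(): AstNode { return binaryLevel(["*", "/"], primary); }
  function primary(): AstNode {
    const token = tokens[pos++];
    if (token?.kind === "num") return token;
    if (token?.kind === "op" && token.value === "(") {
      const inner = expression();
      const closing = tokens[pos++];
      if (closing?.kind !== "op" || closing.value !== ")") throw new SyntaxError("Expected ')'");
      return inner;
    }
    throw new SyntaxError("Expected a number or '('");
  }

  const ast = expression();
  if (pos !== tokens.length) throw new SyntaxError("Unexpected token after expression");
  return ast;
}

// 3. Executing: walk the tree and compute the value.
const applyOp: Record<BinaryOp, (a: number, b: number) => number> = {
  "+": (a, b) => a + b,
  "-": (a, b) => a - b,
  "*": (a, b) => a * b,
  "/": (a, b) => a / b,
};
function evaluate(node: AstNode): number {
  if (node.kind === "num") return node.value;
  return applyOp[node.op](evaluate(node.left), evaluate(node.right));
}

console.log(evaluate(parse(tokenize("1 + 2 * 3")))); // 7
```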

Major JavaScript Engines:

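- V8 (Google Chrome, Node.js, Microsoft Edge): Known for its speed and efficiency, V8 first compiles JavaScript to bytecode that its Ignition interpreter runs, then uses optimizing compilers such as TurboFan to turn frequently executed code into optimized machine code.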

- SpiderMonkey (Mozilla Firefox): The first-ever JavaScript engine, it has evolved significantly, focusing on performance and scalability.

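- JavaScriptCore (Safari): Also known as Nitro, it is the engine behind WebKit and Safari, and it uses multiple execution tiers (an interpreter plus several JIT compilers) to balance fast startup with efficient execution.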

Learn more:

- JavaScript engine

- Bare Metal JavaScript: The JavaScript Virtual Machine from Frontend Masters

5. Core Competencies

This section identifies and defines the core competencies associated with being a front-end developer.

5.1 — Code Editors

Code editors are software tools used by developers to write and edit code. They are an essential part of a programmer's toolkit, designed to facilitate the process of coding by providing a convenient and efficient environment. Code editors can range from simple, lightweight programs to complex Integrated Development Environments (IDEs) with a wide array of features.

Key Characteristics of Code Editors:

- Syntax Highlighting: They highlight different parts of source code in various colors and fonts, improving readability and distinguishing code elements.

- Code Completion: Also known as IntelliSense or auto-completion, this feature suggests completions for partially typed code, such as variable, function, and property names.

- Error Detection: Many editors detect syntax errors in real-time, aiding in quick debugging.

- File and Project Management: Features for managing files and projects are often included, easing navigation in complex projects.

- Customization and Extensions: Most editors offer customization and support for extensions to add additional functionalities.

- Integrated Development Environment (IDE): Combines the features of a code editor with additional tools like debuggers and version control.

The choice of a code editor depends on factors such as programming language, project complexity, user interface preference, and required functionalities. Some developers prefer simple editors for quick edits, while others opt for robust IDEs for full-scale development. Code editors are indispensable in the software development process.

Learn more:

- Code/Text editors on MDN

5.2 — HyperText Markup Language (HTML)

HTML, which stands for HyperText Markup Language, is the standard language used to create web pages. It's not a programming language like JavaScript; instead, it's a markup language that defines the structure and content of a web page.

Here's a basic breakdown of how HTML works:

- Elements and Tags: HTML uses 'elements' to define different parts of a web page. Each element is enclosed in 'tags', which are written in angle brackets. For example, <p> is the opening tag for a paragraph and </p> is the closing tag. The content goes between these tags.

- Structure of a Document: An HTML document has a defined structure with a head (<head>) and a body (<body>). The head contains meta-information like the title of the page, while the body contains the actual content that's visible to users.

- Hierarchy and Nesting: Elements can be nested within each other to create a hierarchy. This nesting helps in organizing the content and defines parent-child relationships between elements.

- Attributes: Elements can have attributes that provide additional information about them. For example, the href attribute in an anchor (link) element (<a>) specifies the URL the link goes to.

- Common Elements: Some common HTML elements include:

- <h1> to <h6>: Heading elements, with <h1> being the highest level.

- <p>: Paragraph element.

- <a>: Anchor element for links.

- <img>: Image element.

- <ul>, <ol>, <li>: Unordered (bullets) and ordered (numbers) list elements.

Imagine HTML as the skeleton of a web page. It outlines the structure, but it doesn't deal with the visual styling (that's what CSS is for) or interactive functionality (JavaScript's domain). As a front-end engineer, you would use HTML in combination with CSS and JavaScript to build and style dynamic, interactive web pages.
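In practice you would write HTML by hand, but the rules above (tags, attributes, nesting, and elements like <img> that have no closing tag) can be made explicit with a small TypeScript sketch that turns a tree of elements into markup. The HtmlNode type and toHtml function are hypothetical, and only a subset of the void elements defined by HTML is listed.

```typescript
// A tiny element tree: strings are text, objects are elements.
type HtmlNode = string | { tag: string; attrs?: Record<string, string>; children?: HtmlNode[] };

// Void elements have no closing tag (a subset of the full list in the HTML standard).
const VOID_ELEMENTS = new Set(["br", "hr", "img", "input", "link", "meta"]);

// Escape characters that would otherwise be read as markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function toHtml(node: HtmlNode): string {
  if (typeof node === "string") return escapeHtml(node);
  // Attributes go inside the opening tag as name="value" pairs.
  const attrs = Object.entries(node.attrs ?? {})
    .map(([name, value]) => ` ${name}="${escapeHtml(value)}"`)
    .join("");
  if (VOID_ELEMENTS.has(node.tag)) return `<${node.tag}${attrs}>`;
  // Nested children are rendered between the opening and closing tags.
  const inner = (node.children ?? []).map(toHtml).join("");
  return `<${node.tag}${attrs}>${inner}</${node.tag}>`;
}

console.log(
  toHtml({
    tag: "p",
    children: ["Read the ", { tag: "a", attrs: { href: "https://developer.mozilla.org" }, children: ["MDN docs"] }, "."],
  }),
); // <p>Read the <a href="https://developer.mozilla.org">MDN docs</a>.</p>
```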

Learn more:

- Guide to HTML on MDN

- Introduction to HTML (Part of the Free Bootcamp) from Frontend Masters

- Complete Intro to Web Development (HTML Section) from Frontend Masters

- Learn HTML on web.dev

5.3 — Cascading Style Sheets (CSS)

CSS, or Cascading Style Sheets, is a cornerstone style sheet language used in web development to describe the presentation of documents written in HTML. It empowers developers and designers to control the visual aesthetics of web pages, including layout, colors, fonts, and responsiveness to different screen sizes. Unlike HTML, which structures content, CSS focuses on how that content is displayed, enabling the separation of content and design for more efficient and flexible styling. The "cascading" aspect of CSS allows multiple style sheets to influence a single web page, with specific rules taking precedence over others, leading to a cohesive and visually engaging user experience across the web.

Imagine HTML as the skeleton of a web page—it defines where the headers, paragraphs, images, and other elements go. CSS is like the clothing and makeup—it determines how these elements look. Here's a breakdown:

- Selectors and Properties: In CSS, you write "rules" that target HTML elements and specify how they should be styled. A CSS rule consists of a "selector" (which targets the HTML elements) and a declaration block of "property: value" pairs (which style them). For example, the rule p { color: red; } targets all <p> (paragraph) elements and sets their text color to red.

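- Cascading and Specificity: When several rules set the same property on an element, the cascade decides which one wins by considering origin and importance (such as !important), then specificity, then source order (the later rule wins a tie). Inline styles outrank any selector-based rule, and among selectors, ID selectors are more specific than class, attribute, and pseudo-class selectors, which are in turn more specific than type (tag) selectors.

- Box Model: Every element is laid out as a rectangular box made up of its content area surrounded by padding, a border, and a margin. These properties define the space within and around each element, affecting layout and spacing.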

- External, Internal, and Inline: CSS can be included externally in a .css file, internally in the HTML head, or inline within HTML elements.

- Responsive Design: CSS allows you to make web pages look good on different devices and screen sizes. This is often done using "media queries," which apply different styles based on the device's characteristics, like its width.

- Animation and Interaction: CSS isn't just about static styles. You can create animations, transitions, and hover effects, enhancing the interactivity and visual appeal of your web page.
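The specificity ordering described above is often written as a three-part score (IDs, classes, types) compared left to right. This TypeScript sketch computes that score for simple selectors. The function names are hypothetical, inline styles and !important are outside its scope, and arguments of functional pseudo-classes are ignored, so selectors using :not(), :is(), :has(), or :where() are not scored correctly.

```typescript
// [IDs, classes/attributes/pseudo-classes, types/pseudo-elements]
type Specificity = [number, number, number];

function specificity(selector: string): Specificity {
  // Drop arguments such as (2n+1) so they are not mistaken for type selectors.
  const withoutArgs = selector.replace(/\([^)]*\)/g, "");
  const tokenPattern = /#[\w-]+|\.[\w-]+|\[[^\]]*\]|::[\w-]+|:[\w-]+|\*|[a-zA-Z][\w-]*/g;
  const score: Specificity = [0, 0, 0];
  for (const token of withoutArgs.match(tokenPattern) ?? []) {
    if (token.startsWith("#")) score[0]++; // ID selector
    else if (token.startsWith("::")) score[2]++; // pseudo-element
    else if (token.startsWith(".") || token.startsWith("[") || token.startsWith(":")) score[1]++;
    else if (token !== "*") score[2]++; // type selector; the universal selector adds nothing
  }
  return score;
}

// Compare component by component: the first difference decides.
function compareSpecificity(a: Specificity, b: Specificity): number {
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] - b[i];
  }
  return 0;
}

console.log(specificity("#nav li.active a:hover")); // [ 1, 2, 2 ]
// One ID beats any number of classes:
console.log(compareSpecificity(specificity("#main"), specificity(".a.b.c")) > 0); // true
```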

Understanding CSS involves getting familiar with its syntax and rules, and then applying them to create visually appealing and functional web pages. As a front-end engineer, you'd often work closely with CSS, alongside HTML and JavaScript, to create the user-facing part of websites and applications.

Learn more:

- Guide to CSS on MDN

- Introduction to CSS (Part of the Free Bootcamp) from Frontend Masters

- Complete Intro to Web Development (CSS Section) from Frontend Masters

- Getting Started with CSS from Frontend Masters

- Learn CSS on web.dev

References:

- cssreference.io

- css4-selectors.com

- CSS Reference on MDN

- CSS Selectors Reference on MDN

- What's next for CSS?

5.4 — JavaScript Programming Language (ECMAScript 262)

JavaScript, standardized as ECMAScript (specification ECMA-262), is a dynamic programming language crucial for web development. It works alongside HTML and CSS to create interactive web pages and is integral to most web applications.

Role in Web Development:

- JavaScript, along with HTML and CSS, is a foundational technology of the World Wide Web. It adds interactivity to web pages.

- It's primarily used for client-side scripting, running in the user's web browser to add interactive features.

Beyond Web Pages:

- With Node.js, JavaScript can also be used on the server-side, enabling full-scale web application development.

- Node.js also empowers developers to create command-line interface (CLI) tools using JavaScript. This expands the utility of JavaScript to include server management, automation tasks, and development tooling, all in a familiar language for web developers.

Key Features:

- In the browser, JavaScript code is typically event-driven, running in response to user actions and other events to make websites more dynamic.

- It supports asynchronous programming for tasks such as loading new data without reloading the entire page.

- It uses prototype-based object orientation, offering flexible inheritance patterns.
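Here is a short TypeScript sketch of two of the features above: an object that inherits behavior through its prototype, and an async function that waits on a Promise without blocking other code. The names are hypothetical, and the timer stands in for a real network request.

```typescript
// Prototype-based inheritance: dog has no describe() of its own,
// so property lookups fall through to its prototype, animal.
const animal = {
  describe(this: { name: string }): string {
    return `${this.name} is an animal`;
  },
};
const dog = Object.create(animal) as typeof animal & { name: string };
dog.name = "Rex";

console.log(dog.describe()); // "Rex is an animal"
console.log(Object.keys(dog)); // [ 'name' ]: describe lives on the prototype
console.log(Object.getPrototypeOf(dog) === animal); // true

// Asynchronous programming: await pauses only this function, not the whole program.
function delay(ms: number): Promise<void> {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

async function loadData(): Promise<string> {
  await delay(10); // stands in for a network request
  return "data loaded";
}

loadData().then((message) => console.log(message)); // logs after the synchronous code above
```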

Learning Curve and Community:

- It's often recommended as a first programming language due to its beginner-friendly nature and immediate visual feedback in web browsers.

- JavaScript has a large developer community, providing abundant resources, tutorials, and documentation for learners.

JavaScript is a powerful programming language that's essential for web development. It's a versatile language that can be used for both front-end and back-end development, making it a must-learn for aspiring web developers.

Learn more:

- Guide to JavaScript on MDN

- Introduction to JavaScript (Part of the Free Bootcamp) from Frontend Masters

- JavaScript: From First Steps to Professional from Frontend Masters

- JavaScript Learning Path from Frontend Masters

- JavaScript Roadmap

Reference:

- MDN JavaScript Reference on MDN

5.5 — Document Object Model (DOM)

The Document Object Model (DOM) is a fundamental programming interface for web documents that conceptualizes a webpage as a hierarchical tree of nodes, enabling dynamic interaction and manipulation. This model transforms each HTML element, attribute, and text snippet into an accessible object, allowing programming languages, particularly JavaScript, to effectively alter the page's structure, style, and content. The DOM's tree-like structure not only simplifies navigating and editing web documents but also facilitates real-time updates, event handling, and interaction, making it indispensable for creating responsive and interactive web applications.

Key Features:

- Tree Structure: The DOM represents a web page as a tree, with elements, attributes, and text as nodes. An HTML document, for example, is a tree that includes nodes like <html>, <head>, and <body>.

- Manipulation: Programming languages, especially JavaScript, can manipulate the DOM. This allows for changes in HTML elements, attributes, and text, as well as adding or removing elements.

- Event Handling: The DOM handles events caused by user interactions or browser activities. It allows scripts to respond to these events through event handlers.

- Dynamic Changes: With the DOM, web pages can dynamically change content and structure without needing to reload, enabling interactive and dynamic web applications.

The DOM is a crucial part of web development, allowing for dynamic and interactive web pages. It's a powerful interface that's fundamental to the web and is supported by all modern web browsers.
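Browser DOM APIs need a real document, so this TypeScript sketch uses a hypothetical ToyNode class that mimics a few DOM names (nodeName, childNodes, parentNode, appendChild, removeChild, textContent) to show the tree structure and how manipulation changes it. The makeElement and makeText helpers are also hypothetical; in a browser you would use the real equivalents, document.createElement() and document.createTextNode().

```typescript
// A toy stand-in for DOM nodes, so the example runs outside a browser.
class ToyNode {
  readonly nodeName: string;
  readonly data: string; // only meaningful for "#text" nodes
  parentNode: ToyNode | null = null;
  readonly childNodes: ToyNode[] = [];

  constructor(nodeName: string, data = "") {
    this.nodeName = nodeName;
    this.data = data;
  }

  // As in the DOM, appending a node that already has a parent moves it.
  appendChild(child: ToyNode): ToyNode {
    child.parentNode?.removeChild(child);
    child.parentNode = this;
    this.childNodes.push(child);
    return child;
  }

  removeChild(child: ToyNode): ToyNode {
    const index = this.childNodes.indexOf(child);
    if (index === -1) throw new Error("The node is not a child of this node");
    this.childNodes.splice(index, 1);
    child.parentNode = null;
    return child;
  }

  // Concatenated data of all descendant text nodes.
  get textContent(): string {
    if (this.nodeName === "#text") return this.data;
    return this.childNodes.map((child) => child.textContent).join("");
  }
}

const makeElement = (tag: string) => new ToyNode(tag.toUpperCase()); // HTML nodeNames are uppercase
const makeText = (data: string) => new ToyNode("#text", data);

// Depth-first walk: one line per node, indented by depth.
function outline(node: ToyNode, depth = 0): string[] {
  const line = "  ".repeat(depth) + node.nodeName;
  return [line, ...node.childNodes.flatMap((child) => outline(child, depth + 1))];
}

// Build the equivalent of <html><body><h1>Hello</h1><p>World</p></body></html>
const htmlRoot = makeElement("html");
const bodyNode = htmlRoot.appendChild(makeElement("body"));
const heading = bodyNode.appendChild(makeElement("h1"));
heading.appendChild(makeText("Hello"));
const paragraph = bodyNode.appendChild(makeElement("p"));
paragraph.appendChild(makeText("World"));

console.log(outline(htmlRoot).join("\n"));
console.log(bodyNode.textContent); // "HelloWorld"
bodyNode.removeChild(paragraph); // the tree changes without a page reload
console.log(bodyNode.textContent); // "Hello"
```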

Learn more:

- Introduction to the DOM on MDN

- DOM Enlightenment

- Vanilla JS: You Might Not Need a Framework from Frontend Masters

Reference:

- MDN DOM interfaces on MDN

5.6 — TypeScript

TypeScript is an open-source programming language developed and maintained by Microsoft. It is a superset of JavaScript, which means that any syntactically valid JavaScript code is also syntactically valid TypeScript code (although the type checker may still report errors in it). TypeScript adds optional static typing to JavaScript, among other features, enhancing the development experience, especially in larger or more complex codebases.

Key Features of TypeScript:

- Static Type Checking: TypeScript provides static type checking, allowing developers to define types for variables, function parameters, and return values. This helps catch errors and bugs during development, rather than at runtime.

- Type Inference: While TypeScript encourages explicit type annotations, it also has powerful type inference capabilities. This means that it can deduce types from the context, reducing the amount of type-related boilerplate code.

- Advanced Type System: TypeScript's type system includes features like generics, enums, tuples, and union/intersection types. These advanced features provide a robust framework for writing complex and well-structured code.

- Integration with JavaScript Libraries: TypeScript can be used with existing JavaScript libraries and frameworks. Type definitions for many popular libraries are available, allowing them to be used in a TypeScript project with the benefits of type checking.

- Tooling Support: TypeScript has excellent tooling support with integrated development environments (IDEs) and editors like Visual Studio Code. This includes features like autocompletion, navigation, and refactoring.
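The features listed above fit into a small, self-contained example: an interface, a union type, a generic function, type inference, and narrowing. The User and Result types and the findById function are hypothetical.

```typescript
interface User {
  id: number;
  name: string;
  email?: string; // optional property
}

// Union type: a lookup either succeeds with a value or fails with an error message.
type Result<T> = { ok: true; value: T } | { ok: false; error: string };

// Generic function: works for any item type that has a numeric id.
function findById<T extends { id: number }>(items: T[], id: number): Result<T> {
  const item = items.find((candidate) => candidate.id === id);
  return item ? { ok: true, value: item } : { ok: false, error: `No item with id ${id}` };
}

const users: User[] = [
  { id: 1, name: "Ada" },
  { id: 2, name: "Grace", email: "grace@example.com" },
];

// Type inference: result is inferred as Result<User> without an annotation.
const result = findById(users, 2);

if (result.ok) {
  // Narrowing: inside this branch TypeScript knows result.value exists.
  console.log(result.value.name); // "Grace"
} else {
  console.log(result.error);
}

// findById(users, "2"); // compile-time error: a string is not assignable to a number
```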

Advantages of Using TypeScript:

- Improved Code Quality and Maintainability: Static typing helps detect errors early in the development process, improving overall code quality.

- Easier Refactoring and Debugging: Types make it easier to refactor and debug code, as they provide more information about what the code is supposed to do.

- Better Developer Experience: Tooling support with autocompletion, code navigation, and documentation improves the developer experience.

- Scalability: TypeScript is well-suited for large codebases and teams, where its features can help manage complexity and ensure code consistency.

Considerations:

- Learning Curve: For developers not familiar with static typing, there is a learning curve to using TypeScript effectively.

- Compilation Step: The need to transpile TypeScript into JavaScript adds an extra step to the build process.

In summary, TypeScript enhances JavaScript by adding static typing and other useful features, making it a powerful choice for developing large-scale applications or projects where code maintainability is a priority. It's widely adopted in the front-end community, especially in projects where developers benefit from its robust type system and tooling support.

Learn more:

- TypeScript Handbook

- TypeScript 5+ Fundamentals, v4 from Frontend Masters

- TypeScript Learning Path from Frontend Masters

- Beginner's TypeScript

- The Concise TypeScript Book

- TypeScript Road Map

5.7 — JavaScript Web APIs (aka Web Browser APIs)

JavaScript Web Platform APIs are a collection of application programming interfaces (APIs) that are built into web browsers. They provide the building blocks for modern web applications, allowing developers to interact with the browser and the underlying operating system. These APIs enable web applications to perform various tasks that were traditionally only possible in native applications.

Key Categories and Examples:

- Graphics and Media APIs: Graphics APIs like Canvas and WebGL allow for rendering 2D and 3D graphics. Media APIs, such as the HTMLMediaElement interface and the Web Audio API, enable playing and manipulating audio and video content.

- Communication APIs: Facilitate communication between different parts of a web application or between applications. Examples include WebSockets and the Fetch API.

- Device APIs: Provide access to the capabilities of the user's device, like the camera, microphone, GPS. Examples include the Geolocation API, Media Capture and Streams API, and the Battery Status API.

- Storage APIs: Allow web applications to store data locally on the user's device. Examples include the Web Storage API (localStorage and sessionStorage) and IndexedDB.

- Service Workers and Offline APIs: Enable applications to work offline and improve performance by caching resources. Service workers can intercept network requests and receive push messages from a server via the Push API.

- Performance APIs: Help in measuring and optimizing the performance of web applications. Examples include the Navigation Timing API and the Performance Observer API.
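As one small example of a Performance API, performance.now() from the High Resolution Time API returns a high-resolution timestamp in milliseconds, which makes it useful for timing code. The measure helper below is hypothetical; it runs in browsers and in recent versions of Node.js, where performance is also available as a global.

```typescript
// Times a synchronous function with the high-resolution clock.
function measure<T>(fn: () => T): { result: T; durationMs: number } {
  const start = performance.now();
  const result = fn();
  return { result, durationMs: performance.now() - start };
}

const { result, durationMs } = measure(() => {
  let sum = 0;
  for (let i = 0; i < 1_000_000; i++) sum += i;
  return sum;
});

console.log(result); // 499999500000
console.log(`${durationMs.toFixed(2)} ms`); // varies by machine
```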

Web Platform APIs have significantly expanded the capabilities of web applications, allowing them to be more interactive, responsive, and feature-rich. They enable developers to create applications that work across different platforms and devices without the need for native code, reducing development time and costs. The use of these APIs is fundamental in building modern web applications that provide user experiences comparable to native applications.

These APIs are standardized by bodies such as the World Wide Web Consortium (W3C) and the Web Hypertext Application Technology Working Group (WHATWG). Browser support for various APIs can vary.

Learn more:

- Introduction to web APIs on MDN

- List of JavaScript Web APIs (Specifications and Interfaces) on MDN

- The Web Platform: Browser technologies

- Browser APIs Learning Path from Frontend Masters

5.8 — JavaScript Object Notation (JSON)

JSON (JavaScript Object Notation) is a lightweight data-interchange format that is easy for humans to read and write and easy for machines to parse and generate. It's a text-based format, consisting of name-value pairs and ordered lists of values, which is used extensively in web development and various other programming contexts. Here's a breakdown of its key characteristics:

- Lightweight Data Format: JSON is text-based, making it lightweight and suitable for data interchange.

- Human and Machine Readable: Its structure is simple and clear, making it readable by humans and easily parsed by machines.

- Language Independent: Despite its name, JSON is independent of JavaScript and can be used with many programming languages.

JSON's simplicity, efficiency, and wide support across programming languages have made it a fundamental tool in modern software development, particularly for web APIs, configuration management, and data interchange in distributed systems.
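JavaScript (and therefore TypeScript) has built-in JSON.stringify and JSON.parse functions for converting between values and JSON text. The Article shape below is hypothetical; note that JSON.parse returns an untyped value, so the type annotation on the parsed result is an unchecked assertion.

```typescript
interface Article {
  title: string;
  tags: string[];
  published: boolean;
  views: number | null;
}

const article: Article = { title: "Intro to JSON", tags: ["json", "web"], published: true, views: null };

// Serialize to JSON text (e.g. for an HTTP request body or localStorage).
const json = JSON.stringify(article);
console.log(json); // {"title":"Intro to JSON","tags":["json","web"],"published":true,"views":null}

// Parse it back. JSON.parse returns `any`, so this type is an assertion, not a check.
const parsed = JSON.parse(json) as Article;
console.log(parsed.tags[1]); // "web"

// Values JSON cannot represent are converted or dropped during stringify.
console.log(JSON.stringify({ when: new Date(0), skip: undefined })); // {"when":"1970-01-01T00:00:00.000Z"}
```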

5.9 — ES Modules

ES Modules (ECMAScript Modules) are the official standard for modular JavaScript code. They provide a way to structure and organize JavaScript code efficiently for reuse.

Key Features of ES Modules:

- Export and Import Syntax: ES Modules allow developers to export functions, objects, or primitives from a module so that they can be reused in other JavaScript files. This is done using the export keyword. Conversely, the import keyword is used to bring in these exports from other modules, creating a network of dependencies that are easy to trace and manage.

- Modular Code Structure: By breaking down JavaScript code into smaller, modular files, ES Modules encourage a more organized coding structure. This modularization leads to improved code readability and maintainability, especially in large-scale applications.

- Static Module Structure: ES Modules have a static structure, meaning import and export declarations appear at the top level of a module and cannot be changed at runtime (the separate dynamic import() expression exists for loading modules on demand). Because dependencies can be determined without running the code, tools such as bundlers can optimize ahead of time, for example with tree shaking (eliminating unused code).

- Broad Compatibility: ES Modules are natively supported in modern web browsers and in Node.js since version 12.17.0. They can also be used in older browsers and Node.js versions with the help of transpilers like Babel or bundlers like Rollup.js.
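Here is a minimal sketch of a module file using named and default exports. The file name math-utils.ts and its functions are hypothetical; the import line another module would use is shown as a comment, since a consumer lives in a separate file.

```typescript
// math-utils.ts (hypothetical module file)

// Named export: importers must use this exact name (or rename it with "as").
export function circleArea(radius: number): number {
  return Math.PI * radius * radius;
}

// Default export: importers may pick any local name for it.
export default function clamp(value: number, min: number, max: number): number {
  return Math.min(Math.max(value, min), max);
}

// In another module, a consumer would write:
//   import clamp, { circleArea } from "./math-utils.js";
//   console.log(clamp(circleArea(2), 0, 10)); // 10, since circleArea(2) is about 12.57
```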

5.10 — Command Line

The command line is a vital tool for front-end developers, offering a text-based interface to efficiently interact with a computer's operating system. It is instrumental in modern web development workflows, particularly when working with Node.js and various front-end development tools. Known also as the terminal, shell, or command prompt, the command line allows developers to execute a range of commands for tasks such as running Node.js scripts, managing project dependencies, or initiating build processes.

Mastery of the command line enables front-end developers to leverage Node.js tools like npm (Node Package Manager) to install, update, and manage packages required in web projects. It also makes it possible to run build tools like Vite and other command-line tooling that automates repetitive tasks such as bundling, minification, compilation, and testing. Additionally, the command line provides direct access to version control systems like Git, enhancing workflow efficiency and collaboration in team environments.

While the command line may initially seem intimidating due to its lack of graphical interface, its potential for automating tasks and streamlining development processes makes it an invaluable skill for front-end developers.

Learn more:

- Command line crash course on MDN

- Complete Intro to Linux and the Command-Line from Frontend Masters

5.11 — Node.js

Node.js is an open-source, cross-platform JavaScript runtime environment that enables JavaScript to run on the server side, extending its capabilities beyond web browsers. It operates on an event-driven, non-blocking I/O model, making it efficient for data-intensive real-time applications that run across distributed devices.

Beyond its use in server-side development, Node.js also serves as a powerful tool in command line environments for various development tasks, such as running build processes, automating tasks, and managing project dependencies. Its integration with npm (Node Package Manager) provides access to a vast repository of libraries and tools. This dual role as both a server runtime and a command-line tool makes Node.js a versatile platform for web development.

- Runtime Environment: It provides a platform to execute JavaScript on servers and various back-end applications.

- Non-blocking I/O: Node.js operates on an event-driven, non-blocking I/O model, enabling efficient handling of multiple operations simultaneously.

- Use of JavaScript: It leverages JavaScript, allowing for consistent language use across both client-side and server-side scripts.

- NPM (Node Package Manager): Comes with a vast library ecosystem through NPM, facilitating the development of complex applications.
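Node.js's non-blocking model means work is scheduled as callbacks rather than waited on. This TypeScript sketch records the order in which three kinds of code run: synchronous code, a Promise callback (a microtask), and a timer callback. The function name is hypothetical; the ordering shown is standard behavior in both Node.js and browsers.

```typescript
// Records the order in which different kinds of callbacks run.
function eventLoopDemo(): Promise<string[]> {
  const order: string[] = [];
  return new Promise((resolve) => {
    // Timer callback: queued to run on a later turn of the event loop.
    setTimeout(() => {
      order.push("timer callback");
      resolve(order);
    }, 0);
    // Promise callback: a microtask, run as soon as the current synchronous code finishes.
    Promise.resolve().then(() => order.push("promise microtask"));
    // Plain synchronous code runs first; nothing above blocked it.
    order.push("synchronous code");
  });
}

eventLoopDemo().then((order) => console.log(order.join(" -> ")));
// synchronous code -> promise microtask -> timer callback
```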

Node.js is a powerful tool in the web development ecosystem. It allows for the use of JavaScript on the server-side, enabling full-stack development in a single language. It also provides a robust command-line interface for various development tasks, making it a versatile platform for web developers.

Learn more:

- Introduction to Node.js

- Introduction to Node.js, v3 from Frontend Masters

- Node.js Learning Path from Frontend Masters

- Node.js Developer Road Map

5.12 — JavaScript Package Managers

JavaScript package managers are essential tools in modern web development, designed to streamline the management of project dependencies. These tools simplify the tasks of installing, updating, configuring, and removing JavaScript libraries and frameworks. By handling dependencies efficiently, package managers facilitate the seamless integration of third-party libraries and tools into development projects, ensuring that developers can focus on writing code rather than managing packages.

Among the most prominent JavaScript package managers are npm (Node Package Manager), Yarn, and pnpm. These package managers allow developers to access and install packages from the public npm registry, which hosts an extensive collection of open-source JavaScript packages, as well as from private registries, catering to both public and private project requirements.
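Package managers use version ranges, such as the caret ranges npm writes into package.json by default, to decide which package versions are acceptable. The TypeScript sketch below checks a version against a caret range following the Semantic Versioning rules npm uses. It is a simplification: the function names are hypothetical, and pre-release tags, partial versions, and other range operators are not handled.

```typescript
// Parses a plain "MAJOR.MINOR.PATCH" version (no pre-release or build metadata).
function parseVersion(text: string): [number, number, number] {
  const match = /^(\d+)\.(\d+)\.(\d+)$/.exec(text.trim());
  if (match === null) throw new Error(`Unsupported version format: ${text}`);
  return [Number(match[1]), Number(match[2]), Number(match[3])];
}

// Negative if a < b, zero if equal, positive if a > b.
function compareVersions(a: number[], b: number[]): number {
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] - b[i];
  }
  return 0;
}

// ^X.Y.Z accepts versions >= X.Y.Z that keep the left-most non-zero part unchanged:
// ^1.2.3 -> <2.0.0, ^0.2.3 -> <0.3.0, ^0.0.3 -> <0.0.4
function satisfiesCaret(version: string, range: string): boolean {
  if (!range.startsWith("^")) throw new Error("This sketch only handles caret ranges");
  const v = parseVersion(version);
  const min = parseVersion(range.slice(1));
  const [major, minor, patch] = min;
  const upperBound = major > 0 ? [major + 1, 0, 0] : minor > 0 ? [0, minor + 1, 0] : [0, 0, patch + 1];
  return compareVersions(v, min) >= 0 && compareVersions(v, upperBound) < 0;
}

console.log(satisfiesCaret("1.4.2", "^1.2.0")); // true: same major version
console.log(satisfiesCaret("2.0.0", "^1.2.0")); // false: a new major version may break the API
console.log(satisfiesCaret("0.3.0", "^0.2.3")); // false: for 0.x, a minor bump is treated as breaking
```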

5.13 — NPM Registry

The npm registry is a pivotal resource in the JavaScript development community, functioning as an extensive public repository of open-source JavaScript packages. This vast database is integral for developers seeking to publish their own packages or to incorporate existing packages into their projects. The registry's diverse collection ranges from small utility functions to large frameworks, catering to a broad spectrum of development needs.

Serving as more than just a storage space for code, the npm registry is a hub of collaboration and innovation, fostering the sharing and evolution of JavaScript code worldwide. Its comprehensive nature simplifies the discovery and integration of packages, streamlining the development process. Developers can access and manage these packages using JavaScript package managers such as npm, which is bundled with Node.js, as well as other popular managers like Yarn and pnpm. These tools provide seamless interaction with the npm registry, enabling efficient package installation, version management, and dependency resolution.

The npm registry not only facilitates the reuse of code but also plays a crucial role in maintaining the consistency and compatibility of JavaScript projects across diverse environments. Its widespread adoption and the trust placed in it by the developer community underscore its significance as a cornerstone of JavaScript development.
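Package managers talk to the registry over HTTP. As a sketch, the public registry serves a JSON metadata document for each package (often called a "packument") at `https://registry.npmjs.org/<package-name>`, which includes a `dist-tags` object (holding the `latest` version) and a `versions` object keyed by version number. The helper names below are hypothetical, `fetch` is assumed to be available globally (Node.js 18+ or a browser), and the example is limited to unscoped package names.

```javascript
// Fetch the metadata document for an unscoped package from the public npm registry.
async function fetchPackument(packageName) {
  const response = await fetch(`https://registry.npmjs.org/${packageName}`);
  if (!response.ok) {
    throw new Error(`Registry request failed with status ${response.status}`);
  }
  return response.json();
}

// Reduce the (often very large) metadata document to a few useful fields.
function summarizePackument(packument) {
  return {
    name: packument.name,
    latest: packument["dist-tags"].latest,
    versionCount: Object.keys(packument.versions).length,
  };
}

// Usage (requires network access):
// fetchPackument("react").then((doc) => console.log(summarizePackument(doc)));
```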

5.14 — Git

Git is a distributed version control system, widely used for tracking changes in source code during software development. It was created by Linus Torvalds in 2005 for the development of the Linux kernel. Git is designed to handle everything from small to very large projects with speed and efficiency.

Git is an essential tool in modern software development, enabling teams to collaborate effectively while maintaining a complete history of their work and changes. It is integral to managing code revisions and to keeping the development process efficient. Git integrates with code editors, continuous integration systems, and hosting platforms such as GitHub, GitLab, and Bitbucket. Overall, Git's powerful features make it a popular choice for both individual developers and large teams, streamlining version control and code collaboration.
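Part of what makes Git fast and its history trustworthy is that it stores content by hash. In a repository using Git's default SHA-1 object format, a file's contents are stored as a "blob" whose ID is the SHA-1 of a short header (`blob`, a space, the byte length, and a NUL byte) followed by the content. The sketch below recomputes that ID with Node.js's built-in `crypto` module; for the same bytes it should match what `git hash-object` prints. The function name `gitBlobId` is hypothetical.

```javascript
const { createHash } = require("node:crypto");

// Compute the object ID Git assigns to file contents (a "blob") in a
// repository that uses the default SHA-1 object format.
function gitBlobId(content) {
  const body = Buffer.from(content, "utf8");
  const header = Buffer.from(`blob ${body.length}\0`, "utf8"); // length in bytes, not characters
  return createHash("sha1").update(Buffer.concat([header, body])).digest("hex");
}

console.log(gitBlobId(""));               // e69de29bb2d1d6434b8b29ae775ad8c2e48c5391
console.log(gitBlobId("test content\n")); // d670460b4b4aece5915caf5c68d12f560a9fe3e4
```

Because identical content always produces the same ID, Git can detect changes and store unchanged files only once, no matter how many commits reference them.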

Learn more:

- Git's official site

- Git In-Depth from Frontend Masters

- Git and GitHub on MDN

5.15 — Web Accessibility - WCAG & ARIA

The Web Content Accessibility Guidelines (WCAG), published by the W3C, are a set of international standards developed to make the web more accessible to people with disabilities. They provide a framework for creating web content that is accessible to a wider range of people, including those with auditory, cognitive, neurological, physical, speech, and visual disabilities.

Key Elements of WCAG:

- Four Principles: WCAG is built on four foundational principles, stating that web content must be Perceivable (available through the senses), Operable (usable with a variety of devices and input methods), Understandable (easy to comprehend), and Robust (compatible with current and future technologies).

- Levels of Conformance: WCAG defines three levels of accessibility conformance - Level A (minimum level), Level AA (addresses the major and most common barriers), and Level AAA (the highest level of accessibility).

- Guidelines and Success Criteria: Each principle is broken down into guidelines, providing testable success criteria to help measure and achieve accessibility. These criteria are used as benchmarks to ensure websites and applications are accessible to as many users as possible.
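As an example of a testable success criterion, WCAG 2.x Success Criterion 1.4.3 (Contrast, Minimum) requires a contrast ratio of at least 4.5:1 for normal-size text at Level AA. The ratio is defined as (L1 + 0.05) / (L2 + 0.05), where L1 and L2 are the relative luminances of the lighter and darker colors. The sketch below implements the WCAG 2.x relative-luminance formula for six-digit hex colors; the function names are hypothetical.

```javascript
// Convert one 8-bit sRGB channel to its linear value, per the WCAG 2.x
// definition of relative luminance.
function linearize(channel8bit) {
  const c = channel8bit / 255;
  return c <= 0.03928 ? c / 12.92 : ((c + 0.055) / 1.055) ** 2.4;
}

// Relative luminance of a "#rrggbb" color (0 = black, 1 = white).
function relativeLuminance(hex) {
  const [r, g, b] = [1, 3, 5].map((i) => parseInt(hex.slice(i, i + 2), 16));
  return 0.2126 * linearize(r) + 0.7152 * linearize(g) + 0.0722 * linearize(b);
}

// Contrast ratio between two colors, from 1 (no contrast) to 21 (black on white).
function contrastRatio(hexA, hexB) {
  const [lighter, darker] = [relativeLuminance(hexA), relativeLuminance(hexB)].sort(
    (x, y) => y - x
  );
  return (lighter + 0.05) / (darker + 0.05);
}

// Level AA threshold for normal-size text (SC 1.4.3).
const meetsAA = (foreground, background) => contrastRatio(foreground, background) >= 4.5;

console.log(contrastRatio("#000000", "#ffffff").toFixed(2)); // 21.00
console.log(meetsAA("#777777", "#ffffff")); // false (about 4.48:1)
console.log(meetsAA("#767676", "#ffffff")); // true (about 4.54:1)
```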

ARIA (Accessible Rich Internet Applications, also called WAI-ARIA) is a set of roles and attributes that define ways to make web content and web applications more accessible to people with disabilities. ARIA supplements HTML, helping to convey information about dynamic content and complex user interface elements developed with JavaScript, Ajax, HTML, and related technologies.

Role of ARIA in Accessibility:

- Enhancing Semantic HTML: ARIA attributes provide additional context to standard HTML elements, enhancing their meaning for assistive technologies like screen readers.

- Dynamic Content Accessibility: ARIA plays a crucial role in making dynamic content and advanced user interface controls developed with JavaScript accessible.

- Support for Custom Widgets: ARIA lets developers expose the roles, states, and properties of custom widgets that have no native HTML equivalent. Combined with keyboard support implemented in JavaScript, this makes such widgets usable by people with disabilities.
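The sketch below shows what ARIA adds when a generic element such as a `<div>` must act as a toggle button: a `role`, keyboard focusability via `tabindex`, and an `aria-pressed` state that assistive technologies can announce. ARIA only changes what is exposed to assistive technology, so the click and keyboard behavior still has to be written in JavaScript. The function name `enhanceToggle` and the `#mute-toggle` element are hypothetical, and a native `<button>` element remains the better choice whenever one can be used, since it provides this behavior for free.

```javascript
// Hypothetical helper: turns a generic element (e.g., a <div>) into an
// accessible toggle button. A native <button> is preferable when possible.
function enhanceToggle(element, onToggle) {
  element.setAttribute("role", "button");        // announce it as a button
  element.setAttribute("tabindex", "0");         // make it reachable with the Tab key
  element.setAttribute("aria-pressed", "false"); // expose the on/off state

  const toggle = () => {
    const pressed = element.getAttribute("aria-pressed") === "true";
    element.setAttribute("aria-pressed", String(!pressed));
    if (onToggle) onToggle(!pressed);
  };

  // ARIA adds semantics only; pointer and keyboard behavior must be scripted.
  element.addEventListener("click", toggle);
  element.addEventListener("keydown", (event) => {
    if (event.key === "Enter" || event.key === " ") {
      event.preventDefault(); // keep Space from scrolling the page
      toggle();
    }
  });
}

// Usage in a browser:
// enhanceToggle(document.querySelector("#mute-toggle"), (pressed) => {
//   console.log(pressed ? "Muted" : "Unmuted");
// });
```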

WCAG and ARIA are essential tools in making the web accessible to people with disabilities. They provide a framework for developers to create accessible web content and applications, ensuring that everyone can use the web regardless of their abilities.

Learn more:

- Web Accessibility on MDN

- Learn Accessibility on web.dev

- Website Accessibility from Frontend Masters

- Web App Accessibility (feat. React) from Frontend Masters

5.16 — Web Images, File Types, & Data URLs

In the realm of web development, images play a pivotal role in defining the aesthetics and enhancing user engagement on websites. They serve multiple functions, ranging from conveying key information and breaking up text to adding artistic elements that elevate the overall design. A deep understanding of the various image file types and their specific applications is crucial for optimizing performance and visual impact.

Common web image formats include JPEG, for high-quality photographs; PNG, which supports transparency and is ideal for graphics and logos; SVG for scalable vector graphics that maintain quality at any size; and GIF for simple animations. Each format comes with its own set of strengths and use cases, influencing factors such as load time and image clarity.

Additionally, Data URLs provide a way to embed images directly into HTML or CSS, typically by encoding them as a base64 string. This technique can reduce HTTP requests and speed up page loads, particularly for small images and icons. However, use it judiciously: base64 encoding makes the data roughly a third larger, which increases the size of the HTML or CSS file, and embedded images cannot be cached separately from the file that contains them.
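A base64 data URL has the form `data:<media type>;base64,<data>`. The sketch below builds one from raw bytes using Node.js's `Buffer` (in a browser, `FileReader.readAsDataURL()` or `btoa` serve a similar purpose) and computes the base64 size overhead, which is why the technique suits only small assets. The helper names are hypothetical.

```javascript
// Build a base64 data URL from raw bytes (Node.js Buffer API).
function toDataUrl(mediaType, bytes) {
  return `data:${mediaType};base64,${Buffer.from(bytes).toString("base64")}`;
}

// Base64 emits 4 output characters for every 3 input bytes (rounded up).
function base64Length(byteCount) {
  return 4 * Math.ceil(byteCount / 3);
}

const svg = Buffer.from("<svg/>", "utf8");
console.log(toDataUrl("image/svg+xml", svg)); // data:image/svg+xml;base64,PHN2Zy8+
console.log(base64Length(3000));              // 4000 (about 33% larger than the original)
```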

The strategic use of images and understanding their formats and embedding techniques is essential in web development. It not only enhances the visual storytelling of a website but also contributes to its performance and user experience.

Learn more:

- Guide to Images in HTML on MDN

- Learn Images on web.dev

5.17 — Browser Developer Tools (DevTools)

Browser Developer Tools, commonly known as DevTools, are an indispensable suite integrated within major web browsers such as Google Chrome, Mozilla Firefox, Microsoft Edge, and Safari. These tools are tailored for developers, offering comprehensive insights and powerful functionalities to understand, test, and optimize web pages and web applications. DevTools bridge the gap between coding and user experience, allowing developers to peek under the hood of the browser's rendering and processing of their web pages. From debugging JavaScript to analyzing performance bottlenecks and network issues, DevTools are essential for modern web development.

Learn more:

- What are browser developer tools? on MDN

- Introduction to Dev Tools, v3 from Frontend Masters

6. Other Competencies & Paradigms

This section identifies and defines other potential competencies and paradigms associated with being a front-end developer.

6.1 — A/B Testing

A/B testing, also known as split testing, is a method used to compare two versions of a web page, app feature, or other product elements to determine which one performs better. It's a process particularly relevant for optimizing user experience and engagement on websites or applications.

The process involves the following steps:

- Hypothesis Formulation: Starting with a hypothesis about how a change could improve a specific metric.

- Creating Variations: Two versions are created - the original (A) and a variant (B).

- Randomized Experimentation: The audience is randomly split into two groups, and each group is shown one of the two versions.

- Data Collection: Data on user behavior is collected for both versions.

- Analysis: Results of both versions are compared to determine the better performer.

- Conclusion: Deciding on the winning version based on the analysis.

- Implementation: The winning version is implemented for all users.

A/B testing allows for data-driven decision-making and is effective in refining user interfaces and experiences, leading to higher user satisfaction and better performance of web projects.
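The randomization and analysis steps can be sketched as follows, under two stated assumptions. First, users are bucketed deterministically by hashing a user ID together with an experiment name, so returning visitors keep seeing the same version; the 32-bit FNV-1a hash is used here as one simple choice. Second, conversion rates are compared with a two-proportion z-test, a common approach for large samples. The function names, the experiment ID, and the sample numbers are hypothetical.

```javascript
// 32-bit FNV-1a hash of a string (hashes UTF-16 code units; fine for ASCII IDs).
function fnv1a(str) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193); // FNV prime, 32-bit multiply
  }
  return hash >>> 0; // convert to an unsigned 32-bit integer
}

// Deterministic 50/50 split: the same user always lands in the same group.
function assignVariant(userId, experimentId) {
  return fnv1a(`${experimentId}:${userId}`) % 2 === 0 ? "A" : "B";
}

// Two-proportion z-test: how many standard errors apart the conversion rates are.
function zScore(conversionsA, visitorsA, conversionsB, visitorsB) {
  const rateA = conversionsA / visitorsA;
  const rateB = conversionsB / visitorsB;
  const pooled = (conversionsA + conversionsB) / (visitorsA + visitorsB);
  const standardError = Math.sqrt(pooled * (1 - pooled) * (1 / visitorsA + 1 / visitorsB));
  return (rateB - rateA) / standardError;
}

console.log(assignVariant("user-42", "checkout-button")); // "A" or "B", stable across calls
const z = zScore(100, 1000, 130, 1000);
console.log(z.toFixed(2)); // 2.10
// |z| > 1.96 corresponds to significance at the 5% level (two-sided).
console.log(Math.abs(z) > 1.96 ? "B differs significantly" : "no significant difference");
```

Hashing instead of calling `Math.random()` on every visit keeps assignment consistent without storing it, and adding the experiment ID to the hash input means the same user can fall into different groups across unrelated experiments.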

6.2 — AI-powered Coding Tools

AI-powered coding tools are software programs that use artificial intelligence (AI) and machine learning (ML) to assist developers in writing code. These tools are designed to improve developer productivity and efficiency by automating repetitive tasks and providing intelligent suggestions. They can be used for various purposes, such as code completion, refactoring, and debugging.

AI-powered coding tools are becoming increasingly popular in the developer community, with many integrated development environments (IDEs) and code editors incorporating them into their platforms. These tools are particularly useful for front-end developers, as they can help with tasks like writing HTML, CSS, and JavaScript code. They can also be used for more complex tasks like refactoring code or debugging.

AI-powered coding tools are still in their early stages, and their capabilities are limited. However, they have the potential to significantly improve developer productivity and efficiency in the future.

6.3 — Adaptive Design

Adaptive design in web development refers to a strategy for creating web pages that work well on multiple devices with different screen sizes and resolutions. Unlike responsive design, which relies on fluid grids and flexible images to adapt the layout to the viewing environment dynamically, adaptive design typically involves designing multiple fixed layout sizes.

Here's a breakdown of key aspects of adaptive design:

- Multiple Fixed Layouts: Adaptive design involves creating several distinct layouts for multiple screen sizes. Typically, designers create layouts for desktop, tablet, and mobile views. Each layout is fixed and doesn't change once it's loaded.

- Device Detection: When a user visits the website, the type of device (e.g., desktop, tablet, mobile) is detected and the appropriate layout is served. Detection on the server usually relies on the User-Agent request header, because the server cannot see the screen directly; detection in the browser with JavaScript can also use the screen or viewport size.

Pros:

- Optimized Performance: Since layouts are pre-designed for specific devices, they can be optimized for performance on those devices.

- Customization: Designers can tailor the user experience to each device more precisely.

Cons:

- More Work: Requires designing and maintaining multiple layouts.

- Less Fluidity: Doesn't cover as many devices as responsive design. New or uncommon screen sizes might not have an optimized layout.

Use Cases: Adaptive design is often chosen when there is a need for highly tailored designs for different devices, or when performance optimization for specific devices is a priority. It can be especially useful for complex sites where different devices require significantly different user interfaces.

In practice, incorporating adaptive design might involve using HTML and CSS to create the different layouts, and server-side logic or JavaScript to detect devices and serve the appropriate layout. A declarative JavaScript library such as SolidJS can help manage the state and reactivity of those different layouts.
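As a sketch of server-side device detection, the function below inspects the `User-Agent` request header and maps it to one of three layouts. The layout file names and function names are hypothetical, and the regular expressions are deliberately naive: user-agent strings vary widely (for example, recent iPads can report a desktop-style user agent by default), which is one reason many teams prefer responsive design or feature detection instead.

```javascript
// Hypothetical layout templates for each device class.
const LAYOUTS = {
  mobile: "layout-mobile.html",
  tablet: "layout-tablet.html",
  desktop: "layout-desktop.html",
};

// Naive classification based on the User-Agent request header.
function detectDeviceClass(userAgent = "") {
  const isAndroid = /Android/i.test(userAgent);
  // Check tablets first: iPads report "iPad", and Android tablets usually omit "Mobile".
  if (/iPad|Tablet/i.test(userAgent) || (isAndroid && !/Mobile/i.test(userAgent))) {
    return "tablet";
  }
  // "Mobi" appears in the user agent of most mobile browsers.
  if (/Mobi|iPhone/i.test(userAgent) || isAndroid) {
    return "mobile";
  }
  return "desktop";
}

function pickLayout(userAgent) {
  return LAYOUTS[detectDeviceClass(userAgent)];
}

const iphone =
  "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X) AppleWebKit/605.1.15 " +
  "(KHTML, like Gecko) Version/17.0 Mobile/15E148 Safari/604.1";
console.log(pickLayout(iphone)); // layout-mobile.html
```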

6.4 — Algorithms

An algorithm is a step-by-step procedure or formula for solving a problem. In the context of web development and programming, it refers to a set of instructions that are designed to perform a specific task or to solve a specific problem. Algorithms are fundamental to all aspects of computer science and software engineering, including web development.
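To make the definition concrete, here is one classic algorithm as a sketch: binary search, which finds a value in a sorted array by repeatedly halving the range that could contain it. It takes O(log n) steps instead of checking every element. The function name is illustrative.

```javascript
// Return the index of `target` in an ascending `sorted` array, or -1 if absent.
function binarySearch(sorted, target) {
  let low = 0;
  let high = sorted.length - 1;
  while (low <= high) {
    const mid = Math.floor((low + high) / 2); // midpoint of the remaining range
    if (sorted[mid] === target) return mid;
    if (sorted[mid] < target) {
      low = mid + 1; // target can only be in the right half
    } else {
      high = mid - 1; // target can only be in the left half
    }
  }
  return -1;
}

console.log(binarySearch([2, 5, 8, 12, 16, 23, 38], 23)); // 5
console.log(binarySearch([2, 5, 8, 12, 16, 23, 38], 7));  // -1
```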

When developing websites or web applications, algorithms can be used for various purposes such as:

- Data Sorting and Searching: Algorithms can sort or search data efficiently. For instance, sorting algorithms like QuickSort or MergeSort can be used to organize data, and search algorithms like binary search can quickly find data in sorted lists.